A Comprehensive Guide to Systematic Review Methods in Ecotoxicology: From Foundations to Advanced Applications

Abstract

This article provides a comprehensive guide to conducting systematic reviews in ecotoxicology, a field dedicated to understanding the effects of toxic chemicals on populations, communities, and ecosystems. Tailored for researchers, scientists, and environmental risk assessors, it covers the entire process from formulating a structured research question to interpreting and applying findings. The scope includes foundational principles adapted from healthcare, practical methodological steps for searching and appraising diverse ecotoxicological studies, strategies to overcome common challenges like heterogeneous data, and an exploration of cutting-edge digital tools and validation frameworks. This resource aims to enhance the rigor, transparency, and impact of evidence synthesis in environmental science.

Systematic Reviews in Ecotoxicology: Establishing the Framework for Robust Evidence Synthesis

Within ecotoxicology, the ability to accurately synthesize evidence regarding the effects of environmental contaminants is paramount for robust risk assessments and regulatory decisions. The methodologies employed for evidence synthesis, typically either traditional literature reviews or systematic reviews, differ profoundly in their rigor, objectivity, and reliability. A traditional literature review often provides a general overview of a topic, but its narrative approach can be susceptible to selection and confirmation bias, as it does not usually apply rigorous, pre-specified methods [1] [2]. In contrast, a systematic review is a scholarly method that follows an explicit, pre-specified plan to minimize bias while systematically identifying, appraising, and synthesizing all relevant empirical evidence on a specific, focused research question [3]. This application note details the key distinctions between these approaches and provides a structured protocol for conducting systematic reviews within ecotoxicological research.

Core Differences: Systematic vs. Traditional Literature Reviews

The fundamental differences between these two review types lie in their goals, methodology, and resultant output. The table below provides a structured comparison.

Table 1: A Comparative Overview of Traditional Literature Reviews and Systematic Reviews

| Feature | Traditional (Narrative) Literature Review | Systematic Review |

|---|---|---|

| Review Question | Broad, descriptive, provides background or context [1] [4]. | Specific, focused, often based on a framework like PICO/PECO to answer a clinical or evidence-based question [1] [2]. |

| Planning & Protocol | Less formal planning; a predefined protocol is typically absent [2]. | Extensive planning with a pre-specified, registered protocol defining the methods before starting [1] [5]. |

| Search Strategy | Search may not be comprehensive or exhaustive; often limited to specific databases [2]. | A highly sensitive, exhaustive search across multiple databases and grey literature, with a documented, reproducible strategy [1] [2]. |

| Study Selection | Criteria not always explicit; selection can be subjective and prone to bias [2]. | Uses explicit, pre-defined eligibility criteria (inclusion/exclusion); typically involves dual independent review to minimize bias [1] [3]. |

| Quality Assessment | Critical appraisal of individual studies is not always performed [2]. | Rigorous critical appraisal of the validity and risk of bias of each included study is a mandatory step [3] [2]. |

| Evidence Synthesis | Typically a narrative, qualitative summary and discussion [1] [2]. | Systematic presentation, often involving qualitative synthesis and potentially a quantitative meta-analysis [1] [3]. |

| Results & Conclusions | Conclusions may be influenced by the author's views and are not always directly tied to all available evidence [1]. | Results are based directly on the evidence; conclusions are structured, and the certainty of the evidence is often graded (e.g., using GRADE) [2] [6]. |

| Primary Objective | To provide context, demonstrate understanding, or introduce new research [1]. | To produce an unbiased, reliable summary of evidence to inform decision-making [2]. |

For ecotoxicology, the PICO framework is often adapted to PECO (Population, Exposure, Comparator, Outcome), which is specifically designed for environmental questions, such as evaluating the effect of a specific chemical (Exposure) on a particular species (Population) compared to a control (Comparator) for a defined endpoint like survival or reproduction (Outcome) [5].

Experimental Protocol: Conducting a Systematic Review

The following section provides a detailed, step-by-step protocol for conducting a high-quality systematic review, adaptable to ecotoxicological research.

Phase 1: Planning and Protocol Development

- Formulate the Review Question: Define a focused, answerable research question. The PECO framework is highly recommended for ecotoxicology.

- Example PECO Question: "What is the effect of glyphosate-based herbicides [Exposure] on the larval development of Xenopus laevis [Population] compared to untreated controls [Comparator] on the outcomes of mortality and malformation rate [Outcome]?"

- Develop & Register the Protocol: Create a detailed research plan that specifies every stage of the review process. This protocol should be registered before the review starts, on a platform such as PROSPERO or the Open Science Framework (OSF), to reduce duplication and bias [2]. The protocol must include:

- Background and objectives.

- Explicit PECO criteria.

- A comprehensive search strategy (databases, search terms, limits).

- Data extraction and management plans.

- Methods for risk of bias assessment and data synthesis.
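
The protocol items listed above can be tracked as a simple structured record, so that gaps are visible before registration. The sketch below is illustrative only: the `ReviewProtocol` class, its field names, and the `missing_items` helper are assumptions introduced here and do not reflect any registry's submission format.

```python
from dataclasses import dataclass, field

PECO_KEYS = ("population", "exposure", "comparator", "outcome")

@dataclass
class ReviewProtocol:
    """Hypothetical container for the protocol items listed in the text."""
    background: str = ""
    objectives: str = ""
    peco: dict = field(default_factory=dict)        # expects the four PECO_KEYS
    databases: list = field(default_factory=list)
    search_terms: list = field(default_factory=list)
    extraction_plan: str = ""
    risk_of_bias_method: str = ""
    synthesis_method: str = ""

def missing_items(protocol):
    """Return the names of protocol items that are still empty."""
    missing = [name for name, value in vars(protocol).items() if not value]
    # PECO is complete only when all four elements are filled in.
    for key in PECO_KEYS:
        if not protocol.peco.get(key):
            missing.append(f"peco.{key}")
    return missing

# Draft based on the example PECO question; the outcome is left blank on purpose.
draft = ReviewProtocol(
    background="Glyphosate-based herbicides and amphibian development",
    objectives="Estimate effects on larval mortality and malformation",
    peco={
        "population": "Xenopus laevis larvae",
        "exposure": "glyphosate-based herbicides",
        "comparator": "untreated controls",
        "outcome": "",
    },
    databases=["Web of Science", "Scopus"],
)
print(missing_items(draft))
# ['search_terms', 'extraction_plan', 'risk_of_bias_method', 'synthesis_method', 'peco.outcome']
```

A check of this kind does not judge the quality of the protocol; it only flags items that have not been written yet.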

Phase 2: Evidence Identification

- Search Strategy: Develop a sensitive search strategy to maximize recall.

- Databases: Search multiple relevant databases (e.g., Web of Science, Scopus, PubMed, Environment Complete, TOXLINE).

- Search Terms: Identify key concepts from the PECO question. Use both free-text terms and controlled vocabulary (e.g., MeSH, Emtree) specific to each database. Combine terms using Boolean operators (AND, OR) [2].

- Grey Literature: Include searches of governmental reports, dissertations, conference proceedings, and pre-print servers to mitigate publication bias [2].

- Study Selection:

- Use reference management software to deduplicate records.

- Screen titles and abstracts against the pre-defined eligibility criteria.

- Retrieve and assess the full text of potentially relevant studies.

- This process should be performed by at least two independent reviewers to ensure reliability. Disagreements are resolved through consensus or a third reviewer [3].
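
The Boolean logic of the search step can be sketched in a few lines: synonyms within one PECO concept are joined with OR, and the concept blocks are joined with AND. The function name `build_query` and the example terms are assumptions chosen for illustration. Real database interfaces differ in field tags, truncation symbols, and phrase syntax, so the generated string would need adapting for each database.

```python
def build_query(concepts):
    """Join synonyms with OR inside each concept block, then join blocks with AND."""
    blocks = []
    for terms in concepts.values():
        # Quote multi-word terms so they are searched as phrases.
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        blocks.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(blocks)

# Illustrative concept blocks derived from the example PECO question.
concepts = {
    "population": ["Xenopus laevis", "African clawed frog"],
    "exposure": ["glyphosate", "Roundup"],
    "outcome": ["mortality", "malformation*"],
}
query = build_query(concepts)
print(query)
# ("Xenopus laevis" OR "African clawed frog") AND (glyphosate OR Roundup) AND (mortality OR malformation*)
```

Keeping the concept blocks in a data structure, rather than in a hand-typed string, makes it easier to document the exact strategy used for each database.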

The workflow for the evidence identification and synthesis phases is a critical path that ensures methodological rigor.

Phase 3: Evidence Evaluation and Synthesis

- Data Extraction: Extract relevant data from included studies into a standardized form. Data points typically include:

- Study identifiers and characteristics (author, year, location).

- PECO elements (population details, exposure regimen, comparator, outcomes measured).

- Key results (e.g., mean, standard deviation, sample size, effect size estimates).

- Data extraction should also be performed in duplicate to prevent errors [3].

- Risk of Bias Assessment: Critically appraise the methodological quality of each included study using an appropriate, validated tool. For animal and ecotoxicological studies, tools like SYRCLE's risk of bias tool or the ECOTOXicology knowledgebase guidance are relevant [5]. This step is also conducted by two independent reviewers.

- Data Synthesis:

- Qualitative Synthesis: Summarize the findings in a narrative form, often structured around the outcomes and the strength of the evidence.

- Quantitative Synthesis (Meta-Analysis): If the studies are sufficiently homogeneous, statistically combine the results to produce an overall summary effect estimate. This involves using software (e.g., RevMan) to generate forest plots and statistical measures [3].

- Summary of Findings Table: Create a clear, transparent table summarizing the main results. This table should include, for each critical outcome, the number of studies, the overall certainty of the evidence (e.g., using the GRADE approach), and the summary effect [6].
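
For studies that are judged sufficiently homogeneous, the quantitative synthesis step can be illustrated with inverse-variance (fixed-effect) pooling. This is a minimal sketch, not a replacement for dedicated software such as RevMan or the R packages named in this note; the function name and the three effect sizes are hypothetical.

```python
import math

def pool_fixed_effect(effects, variances, z=1.96):
    """Inverse-variance weighted mean effect with an approximate 95% CI."""
    if len(effects) != len(variances) or not effects:
        raise ValueError("need one variance per effect size")
    weights = [1.0 / v for v in variances]      # more precise studies weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    se = math.sqrt(1.0 / total)                 # standard error of the pooled effect
    return pooled, pooled - z * se, pooled + z * se

# Hypothetical log response ratios and their variances from three studies.
effects = [-0.40, -0.25, -0.55]
variances = [0.04, 0.02, 0.05]
pooled, lower, upper = pool_fixed_effect(effects, variances)
print(f"pooled = {pooled:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

The same per-study weights are what a forest plot displays as marker sizes, which is why effect sizes and their variances must both be extracted.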

The Scientist's Toolkit: Essential Reagents for a Systematic Review

Systematic reviewing requires specialized "research reagents" in the form of software tools and methodological resources. The following table details key solutions for the modern evidence synthesis scientist.

Table 2: Key Research Reagent Solutions for Conducting a Systematic Review

| Tool / Resource | Function | Example Solutions |

|---|---|---|

| Protocol Registration | Publicly records review plan to prevent duplication and bias. | PROSPERO, Open Science Framework (OSF) |

| Reference Management | Stores, deduplicates, and manages search results. | Covidence [1], Rayyan, DistillerSR [6] |

| Dual Screening & Data Extraction | Platforms that facilitate independent review and consensus. | Covidence [1], DistillerSR [2] [6] |

| Risk of Bias Tools | Validated instruments to assess methodological quality of studies. | SYRCLE's RoB tool, Cochrane RoB tool, OHAT/IRIS/GRADE methods [5] |

| Data Synthesis Software | Conducts statistical meta-analysis and generates forest plots. | RevMan [3], R packages (metafor, meta) |

| Reporting Guidelines | Checklists to ensure complete and transparent reporting of the review. | PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) [3] |

In the high-stakes field of ecotoxicology, where research informs environmental policy and public health protection, the methodology behind evidence synthesis is critical. While traditional literature reviews have a role in providing introductory context, systematic reviews offer a transparent, rigorous, and reproducible process designed to minimize bias and produce the most reliable summary of the available evidence. By adhering to the structured protocol and utilizing the toolkit outlined in this application note, researchers can generate authoritative findings that robustly support chemical risk evaluations and evidence-based environmental decision-making [5].

The Critical Role of Systematic Reviews in Ecological Risk Assessment and Environmental Policy

Systematic reviews provide a critical summary of a body of knowledge that links research to decision making, whether to inform public health, clinical medicine, medical education, system-level changes, or advocacy [7]. In the field of ecotoxicology, which presents fundamental research on the effects of toxic chemicals on populations, communities and terrestrial, freshwater and marine ecosystems [8], systematic reviews serve as an objective source of evidence to inform environmental policy. Good reviews are accessed by a wide range of audiences, including health service users, health service providers, and policy decision makers [7]. The growing awareness regarding the dangers posed by emerging contaminants (ECs) to terrestrial ecosystems and human health underscores the need for rigorous evidence synthesis through systematic review methodologies [9].

Table 1: Bibliometric Analysis of Ecological Risk Assessment Research (2005-2024)

| Analysis Category | Key Findings | Data Source |

|---|---|---|

| Publication Growth | 26.26% annual growth in publications | Web of Science Database [9] |

| Leading Countries | China, USA, and Italy as leading contributors | Citation Analysis [9] |

| Citation Impact | Switzerland exhibited highest citation impact per article | VOSviewer and CiteSpace [9] |

| Key Institutions | Chinese Academy of Sciences, CSIC (Spain), King Saud University | Institutional Analysis [9] |

| Influential Journals | Environmental Toxicology and Chemistry, Journal of Environmental Monitoring | Journal Analysis [9] |

Application Note: Framework for Ecological Systematic Reviews

Protocol Development and Research Question Formulation

A systematic literature review is considered the gold standard of evidence-based research because it is one of the most reliable and objective sources of evidence [6]. It uses explicit, unbiased, and well-documented methods to select, assess, and summarize all the relevant literature related to a specific topic [6]. The process begins with checking for existing reviews and protocols to determine if the review is still needed and whether the question should be altered to address gaps in current knowledge [10].

When developing research questions for ecological risk assessment, authors should consult experts, review research protocols, and employ literature review software to help automatically source, select, and qualify relevant data [6]. Information collected during preliminary literature review can help in structuring how the findings will be presented. The most effective systematic reviews in ecotoxicology formulate clear, well-defined research questions of appropriate scope using established frameworks to define question boundaries [10].

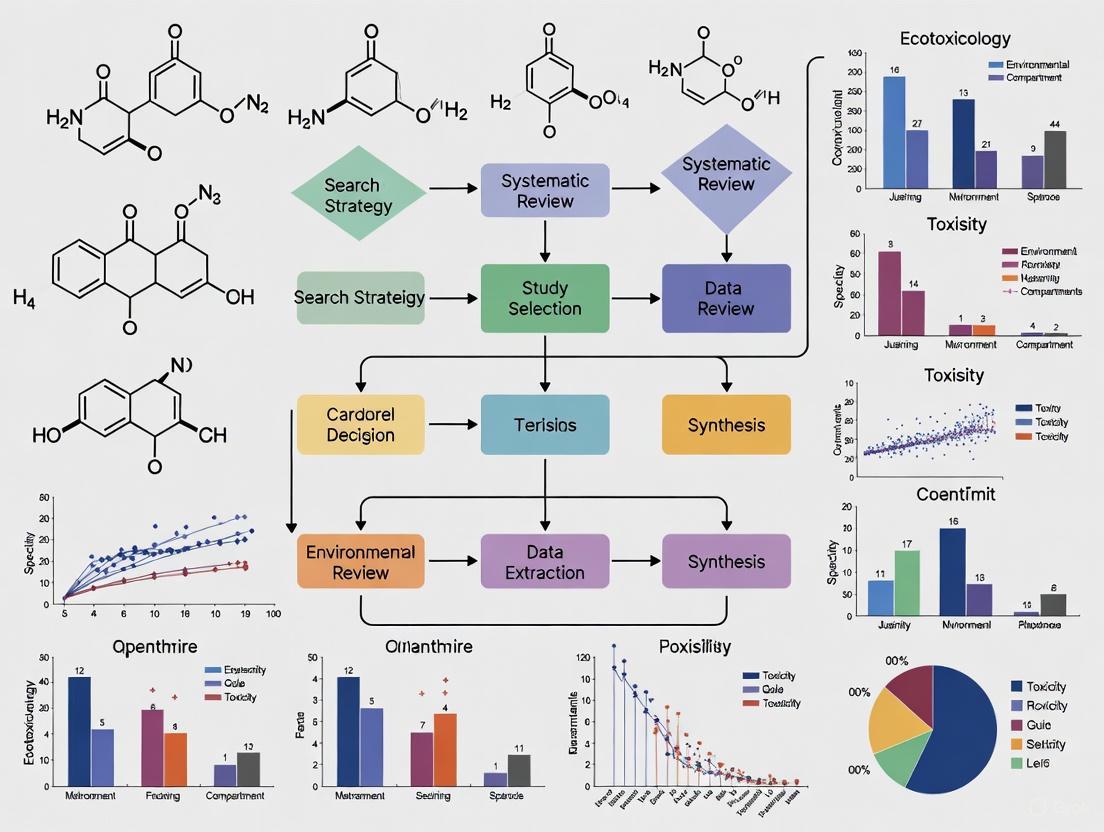

Figure 1: Systematic Review Workflow for Ecotoxicology

Search Strategy and Study Selection

Searching the databases relevant to the topic is best done together with an information specialist, who can help design comprehensive search strategies across a variety of databases [10]. The grey literature should be approached methodically and purposefully [10]. All records retrieved from each search should be collected in a reference manager, such as EndNote, and de-duplicated prior to screening [10].
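
Reference managers handle de-duplication internally, but the underlying idea can be sketched as follows. The matching rule used here (DOI when present, otherwise normalized title plus year) is an assumption made for illustration, and the records and DOIs are hypothetical placeholders.

```python
import re

def record_key(record):
    """Prefer the DOI; otherwise fall back to a normalized title plus year."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    # Lowercase the title and collapse punctuation and spacing differences.
    title = re.sub(r"[^a-z0-9]+", " ", record.get("title", "").lower()).strip()
    return ("title", title, record.get("year"))

def deduplicate(records):
    """Keep the first record seen for each key, preserving input order."""
    seen, unique = set(), []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

# Hypothetical exports from two databases.
records = [
    {"title": "Effects of copper on Daphnia magna", "year": 2019, "doi": "10.1000/ABC123"},
    {"title": "Effects of Copper on Daphnia magna.", "year": 2019, "doi": "10.1000/abc123"},
    {"title": "Microplastics in soil: a review", "year": 2021, "doi": ""},
    {"title": "Microplastics in Soil - A Review", "year": 2021},
]
unique = deduplicate(records)
print(len(records), "->", len(unique))   # 4 -> 2
```

One limitation of this rule is that a record exported with a DOI and a copy exported without one will not be matched, so a manual check of near-duplicates is still advisable.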

For ecological risk assessment, studies should be selected for inclusion based on pre-defined criteria, starting with title/abstract screening to remove studies that are clearly unrelated to the topic [10]. The inclusion/exclusion criteria are then applied to the full text of the remaining studies [10]. It is highly recommended that two independent reviewers screen all studies and resolve disagreements by consensus [10]. This rigorous approach supports the objectivity and comprehensiveness required for environmental policy decisions.
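
Agreement between the two independent reviewers is commonly summarized with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The source does not prescribe a statistic, so the choice of kappa here is an assumption, and the screening decisions below are hypothetical.

```python
def cohens_kappa(decisions_a, decisions_b):
    """Cohen's kappa for two reviewers' categorical screening decisions."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("both reviewers must rate the same non-empty set of records")
    n = len(decisions_a)
    observed = sum(a == b for a, b in zip(decisions_a, decisions_b)) / n
    labels = set(decisions_a) | set(decisions_b)
    # Chance agreement: product of each reviewer's marginal proportion per label.
    expected = sum(
        (decisions_a.count(label) / n) * (decisions_b.count(label) / n)
        for label in labels
    )
    if expected == 1.0:          # both reviewers used a single identical label
        return 1.0
    return (observed - expected) / (1.0 - expected)

# Hypothetical title/abstract decisions for ten records.
reviewer_a = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_b = ["include", "exclude", "include", "include", "exclude",
              "exclude", "exclude", "exclude", "exclude", "exclude"]

kappa = cohens_kappa(reviewer_a, reviewer_b)
conflicts = [i for i, (a, b) in enumerate(zip(reviewer_a, reviewer_b)) if a != b]
print(f"kappa = {kappa:.2f}, records to resolve by consensus: {conflicts}")
```

The list of conflicting records is what the reviewers would take to the consensus discussion.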

Experimental Protocol: Conducting Systematic Reviews in Ecotoxicology

Data Extraction and Management

Data extraction involves using a spreadsheet or systematic review software to extract all relevant data from each included study [10]. It is recommended to pilot the data extraction tool to determine if other fields should be included or existing fields clarified [10]. For ecological risk assessment, this typically includes information about the population and setting addressed by the available evidence, comparisons addressed in the review, including all interventions, and a list of the most important outcomes, whether desirable or undesirable [6].
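
Once means, standard deviations, and sample sizes have been extracted, they can be converted into a standardized effect size. The sketch below computes Hedges' g with its usual large-sample variance approximation. The choice of Hedges' g is an assumption (other metrics, such as log response ratios, are also used in ecology), and the input values are hypothetical.

```python
import math

def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardized mean difference (treatment minus control) with small-sample correction."""
    df = n_t + n_c - 2
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd
    j = 1.0 - 3.0 / (4.0 * df - 1.0)            # correction factor for small samples
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2.0 * (n_t + n_c))
    return j * d, j ** 2 * var_d

# Hypothetical extracted data: offspring per female, exposed vs. control.
g, var_g = hedges_g(mean_t=12.0, sd_t=3.0, n_t=10, mean_c=15.0, sd_c=3.0, n_c=10)
print(f"g = {g:.3f}, variance = {var_g:.3f}")
```

Piloting the extraction form helps confirm that every included study reports the quantities such a calculation needs, or that they can be derived from what is reported.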

Table 2: Essential Research Reagents for Ecological Systematic Reviews

| Research Reagent | Function/Application | Specifications |

|---|---|---|

| Literature Databases | Source identification and retrieval | Web of Science, AGRICOLA, BIOSIS [8] |

| Reference Manager | Collection and de-duplication of records | EndNote, DistillerSR [10] |

| Quality Assessment Tool | Evaluate risk of bias in included studies | Cochrane RoB Tool [10] |

| Data Extraction Form | Systematic capture of relevant study data | Spreadsheet or systematic review software [10] |

| Analysis Software | Bibliometric mapping and visualization of the literature | VOSviewer, CiteSpace [9] |

Risk of Bias Assessment and Evidence Quality

Evaluating the risk of bias of included studies involves using a Risk of Bias tool (such as the Cochrane RoB Tool) to assess the potential biases of studies with regard to study design and other factors [10]. Reviewers can adapt existing tools to best meet the needs of their review, depending on the types of studies included [10]. For ecotoxicology reviews, this assessment is particularly important given the diverse methodologies employed in environmental research.

The summary of findings table includes a grade of the quality of evidence; i.e., a rating of its certainty [6]. This structured tabular format presents the primary findings of a review, particularly information related to the quality of evidence, the magnitude of the effects of the studied interventions, and the aggregate of available data on the main outcomes [6]. Most systematic reviews are expected to have one summary of findings table, but some studies may have multiple tables if the review addresses more than one comparison, or deals with substantially different populations that require separate tables [6].

Figure 2: Ecotoxicology Evidence Pathway

Application Note: Evidence Synthesis and Visualization

Quantitative and Qualitative Synthesis

Presenting results involves clearly presenting findings, including detailed methodology (such as search strategies used, selection criteria, etc.) such that the review can be easily updated in the future with new research findings [10]. A meta-analysis may be performed if the studies allow [10]. For ecological risk assessment, this synthesis provides recommendations for practice and policy-making if sufficient, high-quality evidence exists, or future directions for research to fill existing gaps in knowledge or to strengthen the body of evidence [10].
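
Whether "the studies allow" a meta-analysis is often judged in part from statistical heterogeneity. The following sketch computes Cochran's Q, the I-squared statistic, and a DerSimonian-Laird random-effects estimate. The choice of estimator is an assumption, since the source does not name one, and the effect sizes are hypothetical.

```python
import math

def heterogeneity(effects, variances):
    """Return Cochran's Q, I-squared (%), and the DerSimonian-Laird tau-squared."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies, each with a variance")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, effects)) / total
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    c = total - sum(w * w for w in weights) / total
    tau_squared = max(0.0, (q - df) / c)        # between-study variance, floored at 0
    return q, i_squared, tau_squared

def pool_random_effects(effects, variances):
    """Random-effects pooled estimate and its standard error."""
    _, _, tau_squared = heterogeneity(effects, variances)
    weights = [1.0 / (v + tau_squared) for v in variances]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    return pooled, math.sqrt(1.0 / total)

# Hypothetical effect sizes that disagree strongly despite equal precision.
effects = [0.1, 0.5, 0.9]
variances = [0.01, 0.01, 0.01]
q, i_squared, tau_squared = heterogeneity(effects, variances)
pooled, se = pool_random_effects(effects, variances)
print(f"Q = {q:.1f}, I2 = {i_squared:.1f}%, tau2 = {tau_squared:.3f}, pooled = {pooled:.2f}")
```

A high I-squared value, as in this example, suggests exploring sources of heterogeneity (species, exposure duration, test conditions) before relying on a single pooled estimate.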

Diagrams can play an important role in communicating the review to the reader [7]. Indeed, graphic design is increasingly important for researchers to communicate their work to each other and the wider world [7]. Visualizing the topic under study facilitates discussion, helps understanding by making complexity more accessible, provokes deeper thinking, and makes concepts more memorable [7]. Higher impact scientific articles tend to include more diagrams, possibly because diagrams improve clarity and thereby lead to more citations or because high-impact articles tend to include novel, complex ideas that require visual explanation [7].

Diagrammatic Representation in Systematic Reviews

Diagrams include "logic models," "framework models," or "conceptual models"—terms that are often used interchangeably and inconsistently in the literature [7]. Effective diagrams in systematic reviews serve three primary purposes: illustrating the context and baseline understanding, clarifying the review question and scope, and presenting the results [7]. Almost all of them comprise boxes and arrows to indicate causal relationships, which aligns with systematic reviews generating or testing theories about causal relationships [7].

For meta-analyses, pathway diagrams may be overlaid with quantitative results [7]. For qualitative syntheses, diagrams arrange findings into an image of the emerging theory, offering explanations or relationships between or among observations [7]. Diagrams sometimes combine quantitative and qualitative results from paired or mixed studies to generate an integrated understanding [7]. This approach is particularly valuable in ecological risk assessment where both quantitative exposure data and qualitative ecosystem impact observations must be integrated.

Protocol for Evidence to Policy Translation

The summary of findings table presents the main findings of a review in a transparent, understandable, and simple format [6]. It includes multiple pieces of data derived from both quantitative and qualitative data analysis in systematic reviews [6]. These include information about the main outcomes, the type and number of studies included, the estimates (both relative and absolute) of the effect or association, and important comments about the review, all written in a plain-language summary so that it's easily interpreted [6].

Systematic reviews in ecotoxicology have significant implications for environmental policy, management strategies, and mitigation measures to protect ecosystem and human health [9]. The findings from these reviews help identify key trends, research hotspots, and gaps to provide policy recommendations, inform regulatory frameworks, and suggest future research directions for the sustainable management of emerging contaminants in terrestrial environments [9]. Understanding broader ecological impacts, including ecosystem responses and bioaccumulation, is crucial for informed environmental management and policy-making [9].

Table 3: Temporal Trends in Ecological Risk Assessment Research (2005-2024)

| Time Period | Research Focus | Key Contaminants | Assessment Methods |

|---|---|---|---|

| 2005-2010 | Single contaminant effects | Heavy metals, pesticides | Traditional toxicological assessment |

| 2011-2016 | Mixture toxicity | Pharmaceuticals, endocrine disruptors | Combined risk assessment models |

| 2017-2024 | Ecosystem-scale impacts | Microplastics, emerging contaminants | Ecological network analysis [9] |

Advanced Visualization for Policy Communication

Creating effective diagrams for systematic reviews involves several key steps: choosing the purpose of the diagram before starting to assemble it; identifying the key information to be communicated; working as a team to capture and share understanding from various perspectives; and starting simply and expecting at least a few iterations [7]. Additional considerations include giving the diagram a clear starting point to help readers navigate the diagram more easily; using visual conventions such as reading from left to right, top to bottom, or both to offer a clear flow of ideas; and limiting the number of arrows to guide the readers' gaze [7].

For policy communication, diagrams should use plain language and fewer words without a long legend, key, or acronyms so that the diagram can be understood intuitively [7]. Related information should be grouped in columns or rows with headings, colors, or shapes to draw attention to key parts, such as activities or outcomes [7]. These features should be used selectively to avoid obscuring key relationships with too many layers [7]. The development process should include seeking feedback from others, including peers and the intended audience, while the diagram is developing [7].

Systematic reviews in ecotoxicology require clearly framed research questions to define objectives, delineate approach, and guide the entire review process [11]. The PECO framework (Population, Exposure, Comparator, Outcome) has emerged as the standard for formulating these questions, adapting the well-established PICO (Population, Intervention, Comparator, Outcome) framework used in healthcare research to better suit the unique needs of environmental health sciences [11] [12]. While the Cochrane Handbook, a recognized reference for systematic reviews, does not specifically address the development of questions for reviews of exposures, organizations like the Collaboration for Environmental Evidence, the Navigation Guide, and the U.S. Environmental Protection Agency's (EPA) Integrated Risk Information System (IRIS) have all emphasized the role of the PECO question to guide the systematic review process for questions about exposures [11].

A well-constructed PECO question defines the review's objectives and informs the study design, inclusion/exclusion criteria, and the interpretation of findings [11]. In ecotoxicology, which studies how toxic chemicals interact with organisms in the environment, this framework provides the necessary structure to investigate the effects of environmental contaminants on diverse species and ecosystems [13]. The fundamental challenge in environmental, public, and occupational health research lies in properly identifying the exposure and comparator within the PECO, which differs significantly from formulating questions about intentional interventions in the PICO framework [11].

Defining the PECO Components

Core Elements and Definitions

Each component of the PECO framework serves a distinct purpose in structuring an ecotoxicological research question.

Population (P): This refers to the organisms, ecosystems, or environmental compartments of interest. Ecotoxicology encompasses an enormous biodiversity, including marine and freshwater organisms, terrestrial species from invertebrates to vertebrates, plants, fungi, and microbial communities [13]. The population must be clearly specified, whether it is a specific model species (e.g., Daphnia magna, zebrafish), a functional group (e.g., soil decomposers), or a defined ecosystem (e.g., a freshwater lake sediment community) [13] [14].

Exposure (E): This defines the chemical, contaminant, or stressor under investigation and its characteristics. This can include classic contaminants (e.g., pesticides, metals, persistent organic pollutants), emerging contaminants (e.g., nanomaterials, pharmaceuticals, microplastics), or complex mixtures [14] [15]. The exposure definition should consider aspects such as the route of exposure (e.g., dietary, waterborne, sediment), duration (acute vs. chronic), and chemical speciation or bioavailability where relevant [13].

Comparator (C): This defines the reference scenario against which the exposure is evaluated. This is a particularly challenging component in exposure science. The comparator can be an unexposed control group, a group exposed to background levels of the contaminant, a group exposed to a different level or range of the same contaminant, or an alternative chemical or stressor [11]. The choice of comparator is critical for interpreting the directness and real-world relevance of the findings.

Outcome (O): This specifies the measurable effects or endpoints used to assess the impact of the exposure. In ecotoxicology, common endpoints include survival (lethal effects), reproduction, growth, development, behavior, biochemical biomarkers, genetic toxicity, and population- or community-level changes [16] [13]. Endpoints are often categorized as sublethal or lethal, with sublethal endpoints increasingly used as more sensitive indicators of toxicity [16].
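
The four PECO components translate directly into eligibility criteria. The sketch below shows one way to record them and to log the reason for each exclusion. The `PecoCriteria` class, the `screen` function, and the exact-match rule are simplifying assumptions; in practice screening relies on reviewer judgment rather than string comparison.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PecoCriteria:
    """Hypothetical eligibility criteria, one set of accepted values per PECO element."""
    populations: frozenset
    exposures: frozenset
    comparators: frozenset
    outcomes: frozenset

def screen(study, criteria):
    """Return (eligible, reasons), where reasons names each PECO element that failed."""
    reasons = []
    if study["population"] not in criteria.populations:
        reasons.append("population")
    if study["exposure"] not in criteria.exposures:
        reasons.append("exposure")
    if study["comparator"] not in criteria.comparators:
        reasons.append("comparator")
    # A study qualifies if it reports at least one outcome of interest.
    if not criteria.outcomes & set(study["outcomes"]):
        reasons.append("outcome")
    return (not reasons, reasons)

criteria = PecoCriteria(
    populations=frozenset({"Daphnia magna"}),
    exposures=frozenset({"copper"}),
    comparators=frozenset({"unexposed control"}),
    outcomes=frozenset({"survival", "reproduction"}),
)
study_a = {"population": "Daphnia magna", "exposure": "copper",
           "comparator": "unexposed control", "outcomes": ["reproduction", "growth"]}
study_b = {"population": "Danio rerio", "exposure": "copper",
           "comparator": "unexposed control", "outcomes": ["behavior"]}

print(screen(study_a, criteria))   # (True, [])
print(screen(study_b, criteria))   # (False, ['population', 'outcome'])
```

Recording the failed element for every excluded study provides the exclusion reasons that a transparent review is expected to report.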

Ecotoxicology-Specific Considerations for PECO

Applying PECO in ecotoxicology requires special attention to several factors beyond the basic definitions:

Environmental Realism and Lab-to-Field Extrapolation: A key challenge is translating results from controlled laboratory studies to complex field environments. The PECO question should be framed with consideration for environmental fate and behavior of the chemical, including its persistence, bioaccumulation potential, and transformations in the environment [13] [14].

Trophic Levels and Ecosystem Complexity: Ecotoxicological risk assessment often requires data from species representing different trophic levels (e.g., primary producers, primary consumers, predators) [13]. A PECO question may need to address multiple populations simultaneously or separately to provide a comprehensive hazard assessment.

Multiple Stressors: Organisms in the environment are seldom exposed to a single contaminant in isolation. While PECO typically focuses on a primary exposure, the framework can be adapted to investigate mixtures or interactive effects of multiple stressors [15].

Application Notes: PECO in Practice

Five Paradigmatic Scenarios for PECO Questions

The context of the research and what is already known about the exposure-outcome relationship will dictate how a PECO question is phrased [11]. The following scenarios, adapted for ecotoxicology, provide a framework for formulating questions.

Table 1: PECO Scenarios in Ecotoxicology Systematic Reviews

| Scenario & Context | Approach | Ecotoxicology PECO Example |

|---|---|---|

| 1. Exploring an association or dose-response relationship | Explore the shape and distribution of the relationship between exposure and outcome across a range of exposures. | Among freshwater amphipods (Hyalella azteca), what is the effect of a 1 µg/kg (dry weight) incremental increase in sediment-bound pyrethroid pesticides on mortality? [11] |

| 2. Evaluating effects using data-driven exposure cut-offs | Use cut-offs (e.g., tertiles, quartiles) defined based on the distribution of exposures reported in the literature. | In soil nematodes, what is the effect of the highest quartile of microplastic concentration in soil compared to the lowest quartile on reproductive capacity? [11] |

| 3. Evaluating effects using externally defined cut-offs | Use exposure cut-offs identified from or known from other populations, regulations, or preliminary research. | Among avian insectivores, what is the effect of dietary exposure to EPA chronic toxicity reference values for organophosphates compared to background exposure on fledgling success? [11] |

| 4. Identifying a risk-based exposure threshold | Use existing exposure cut-offs associated with known adverse outcomes of regulatory or biological relevance. | Among aquatic algae, what is the effect of exposure to copper concentrations below the EPA Ambient Water Quality Criterion (< 3.1 µg/L) compared to concentrations at or above it on growth inhibition? [11] |

| 5. Evaluating an intervention to reduce exposure | Select the comparator based on the exposure reduction achievable through a specific intervention or mitigation strategy. | In agricultural streams, what is the effect of implementing riparian buffer zones compared to no buffers on the toxicity of insecticide runoff to benthic macroinvertebrates? [11] |

Quantifying the Exposure and Defining the Comparator

A critical step in implementing Scenarios 2-5 is the quantification of the exposure, often referred to as defining a "cut-off" value [11]. In this context, a cut-off broadly refers to thresholds, levels, durations, means, medians, or ranges of exposure. Sources for defining these values can include:

- Regulatory thresholds from agencies like the EPA or OSHA [11].

- Values reported in the published literature from previous primary research or systematic reviews.

- Current legislation or environmental quality standards.

- A level considered to produce a minimally important change in the outcome, based on biological or ecological significance [11].
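
For data-driven cut-offs such as those in Scenario 2, the quartile boundaries can be derived from the exposure values reported across the included studies. This sketch uses Python's `statistics.quantiles`; the concentrations are hypothetical, and the choice of the "inclusive" method is an assumption that should be fixed in the protocol, because different quantile definitions give slightly different boundaries.

```python
import statistics

def quartile_groups(concentrations):
    """Return the lower and upper quartile cut-offs and the values in each extreme group."""
    q1, _median, q3 = statistics.quantiles(concentrations, n=4, method="inclusive")
    lowest = [c for c in concentrations if c <= q1]
    highest = [c for c in concentrations if c >= q3]
    return q1, q3, lowest, highest

# Hypothetical microplastic concentrations in soil (mg/kg) reported by nine studies.
reported = [10, 20, 30, 40, 50, 60, 70, 80, 90]
q1, q3, lowest, highest = quartile_groups(reported)
print(f"lowest quartile <= {q1}, highest quartile >= {q3}")
print(lowest, highest)   # [10, 20, 30] [70, 80, 90]
```

The comparator in the PECO question is then the lowest group and the exposure of interest is the highest group.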

Experimental Protocols and Data Analysis

Standardized Ecotoxicity Testing Protocols

Data for systematic reviews in ecotoxicology are generated through standardized test guidelines. The following table summarizes key methods and their applications.

Table 2: Standardized Ecotoxicity Test Methods and Data Analysis

| Test Organism / System | Commonly Assessed Endpoints (Outcomes) | Standardized Protocol (e.g., OECD, EPA, ISO) | Recommended Statistical Analysis |

|---|---|---|---|

| Freshwater Algae (e.g., Pseudokirchneriella subcapitata) | Growth rate inhibition, biomass yield | OECD 201, EPA 1003.0 | Regression analysis to calculate EC50 (concentration causing 50% effect) or NOEC/LOEC via ANOVA [16] |

| Freshwater Crustaceans (e.g., Daphnia magna, Ceriodaphnia dubia) | Immobilization (acute), reproduction, growth (chronic) | OECD 202, OECD 211, EPA 1002.0 | Logistic regression for LC50/EC50; ANOVA for reproduction/growth data [16] |

| Fish (e.g., zebrafish, fathead minnow) | Mortality (acute), growth, reproduction, embryonic development | OECD 203, OECD 210, OECD 236 (FET) | Probit or logit analysis for LC50; ANOVA for sublethal endpoints [16] |

| Earthworms (e.g., Eisenia fetida) | Mortality, reproduction, biomass change | OECD 207, OECD 222 | ANOVA for comparison to control; regression for dose-response [13] |

| Sediment-Dwelling Organisms (e.g., Chironomus riparius) | Survival, growth, emergence | OECD 218, OECD 219, EPA 100.1 | ANOVA to compare responses across sediments; possible regression if concentration gradient is established [13] |

Statistical Analysis Workflow

The type of statistical analysis depends on the nature of the data (quantitative, quantal/binary, count) and the study design [16]. The general workflow for analyzing data from a standard dose-response ecotoxicity test is outlined below.

The Scientist's Toolkit: Essential Reagents and Materials

Table 3: Key Research Reagent Solutions in Ecotoxicology

| Reagent / Material | Function and Application in Ecotoxicity Testing |

|---|---|

| Reconstituted Test Water | A standardized synthetic water medium with defined hardness, pH, and alkalinity; ensures reproducibility in aquatic tests by providing a consistent exposure matrix [16]. |

| Control Sediment/Soils | Reference sediments or soils with known properties (e.g., particle size, organic carbon content); used as a negative control and dilution series matrix for sediment/terrestrial tests [13]. |

| Reference Toxicants | Standard, well-characterized chemicals (e.g., potassium dichromate, sodium chloride); used to assess the health and sensitivity of test organisms, ensuring quality control [16]. |

| Algal Culture Medium | A nutrient solution providing essential elements (N, P, trace metals) for culturing and testing algal species according to standardized guidelines [16]. |

| Eluent/Extraction Solvents | High-purity organic solvents (e.g., acetone, hexane, methanol); used to prepare stock solutions of test chemicals and for analytical verification of exposure concentrations [16] [15]. |

Emerging Methods and Integration with AOPs

New Approach Methods (NAMs) and Computational Tools

The field is rapidly evolving with New Approach Methods (NAMs) that can provide data for systematic reviews [17]. These include:

- Omics Technologies: Genomics, transcriptomics, proteomics, and metabolomics provide insights into molecular mechanisms and enable the discovery of sensitive biomarkers of exposure and effect [18].

- In Vitro Testing: Cell-based assays and high-throughput screening (HTS) offer rapid, mechanistic data while reducing reliance on whole-organism tests [18] [19]. Programs like ToxCast and Tox21 have generated millions of data points for thousands of chemicals [19].

- In Silico Methods: Quantitative Structure-Activity Relationships (QSARs) and machine learning models predict toxicity based on chemical structure, useful for prioritizing chemicals for testing or filling data gaps [18] [19].

The Adverse Outcome Pathway (AOP) Framework

The AOP framework provides a structured way to organize evidence linking a molecular initiating event (MIE) to an adverse outcome (AO) at the organism or population level across a series of key events [19]. This conceptual model is highly valuable for structuring PECO questions around mechanistic pathways.

Systematic reviews in ecotoxicology can use the AOP framework to synthesize evidence supporting or refuting key event relationships, thereby strengthening the biological plausibility in a causal assessment [19]. PECO questions can be formulated for each key event in the pathway, creating a comprehensive and mechanistically informed evidence base for environmental risk assessment.
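One way to picture this is to store the pathway as an ordered list of key events and generate one PECO question per event. The sketch below assumes a simplified, illustrative chain (acetylcholinesterase inhibition leading to mortality); it is not an authoritative AOP entry, and the population, exposure, and comparator strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyEvent:
    name: str
    level: str  # biological level of organization


# Simplified, illustrative chain; not an authoritative AOP record.
aop = [
    KeyEvent("Acetylcholinesterase inhibition", "molecular (MIE)"),
    KeyEvent("Acetylcholine accumulation at synapses", "cellular"),
    KeyEvent("Impaired neuromuscular function", "organ"),
    KeyEvent("Increased mortality", "organism (AO)"),
]


def key_event_relationships(events):
    """Pair each key event with the next event downstream."""
    return list(zip(events, events[1:]))


def peco_question(population, exposure, comparator, event):
    """Phrase a PECO question whose outcome is a single key event."""
    return (
        f"In {population} (P), does exposure to {exposure} (E), "
        f"compared with {comparator} (C), alter "
        f"'{event.name}' at the {event.level} level (O)?"
    )


# Hypothetical P, E, and C values for illustration.
questions = [
    peco_question("freshwater fish", "organophosphate insecticides",
                  "unexposed controls", ke)
    for ke in aop
]
```

Each pair returned by `key_event_relationships` marks a link for which supporting or refuting evidence could be sought separately.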

Application Note: Advanced Analytical Techniques for Contaminants of Emerging Concern (CECs)

Background and Principle

The identification of unknown chemical drivers of toxicity in complex environmental samples remains a significant challenge in ecotoxicology. Effect-Directed Analysis (EDA) integrates separation, biotesting, and chemical analysis to isolate and identify causative toxicants [20]. When coupled with Non-Targeted Analysis (NTA) using High-Resolution Mass Spectrometry (HRMS), this approach provides a powerful tool for identifying previously unrecognized Contaminants of Emerging Concern (CECs) [20] [21]. CECs are a broad category of pollutants, such as pharmaceuticals, endocrine-disrupting compounds, and microplastics, whose presence and impacts in the environment are not yet fully understood [21]. The core principle is to fractionate a sample, use bioassays to pinpoint the fractions with biological activity, and then apply HRMS to identify the specific compounds within those active fractions.

Key Quantitative Findings from Systematic Reviews

A systematic quantitative literature review of 95 studies reveals the comparative effectiveness of different analytical approaches in explaining observed sample toxicity. The following table summarizes the key findings, which are critical for designing systematic reviews and prioritizing methodologies [20].

Table 1: Explained Toxicity from Different Analytical Approaches in Ecotoxicological Studies

| Analytical Approach | Description | Median Percentage of Explained Toxicity | Number of Studies (Out of 95) Where Toxicity Was Largely Explained (>75%) |

|---|---|---|---|

| TOXtarget | Analysis focused only on pre-selected target compounds | 13% | Not Specified |

| TOXnon-target | Analysis using non-targeted methods to identify unknowns | 47% | 8 Studies |

| TOXtarget+non-target | Combination of both targeted and non-targeted analysis | 34% | 4 Studies had partially explained endpoints |

Experimental Protocol: EDA-NTA Workflow

Title: Integrated Effect-Directed Analysis and Non-Targeted Analysis for Identification of Bioactive Contaminants.

Objective: To isolate, identify, and confirm the chemical constituents responsible for the toxicity of an environmental sample (e.g., wastewater effluent, surface water, or sediment extract).

Materials and Reagents:

- Solid-Phase Extraction (SPE) Cartridges: e.g., Oasis HLB, C18, for sample concentration and clean-up.

- Bioassay Reagents: Specific to the endpoint of interest (e.g., YES/YAS kits for estrogen/androgen receptor activity, reagents for aryl hydrocarbon receptor assay, or cytotoxicity assays).

- HPLC/UPLC System: With reverse-phase and/or hydrophilic interaction liquid chromatography (HILIC) columns to address analytical bias against polar compounds [20].

- High-Resolution Mass Spectrometer: e.g., Q-TOF or Orbitrap instrument.

- Fraction Collector: For automated collection of HPLC effluent.

- Solvents: HPLC-grade methanol, acetonitrile, and water.

- In silico Prediction Software: e.g., for retention time prediction and spectral simulation to aid identification [20].

Procedure:

- Sample Preparation: Concentrate the water sample (e.g., 1 L) using Solid-Phase Extraction (SPE). Elute the adsorbed compounds with a strong solvent (e.g., methanol), evaporate to dryness, and reconstitute in a small volume of a compatible solvent for bioassay and chemical analysis [20].

- Fractionation: Inject an aliquot of the sample extract onto a preparative HPLC system. Using a fraction collector, collect the effluent over the entire chromatographic run time into multiple fractions (e.g., 10-20). Recombine fractions as needed to reduce the number of bioassays.

- Effect Assessment (Biotesting): Test the original sample extract and all collected fractions using a relevant bioassay battery (e.g., estrogen, androgen, and aryl hydrocarbon receptor assays) [20]. Normalize the biological responses to the original whole extract to locate the bioactive fractions.

- Non-Targeted Chemical Analysis: Analyze the active fractions using LC-HRMS. Data should be acquired in both positive and negative ionization modes to maximize compound coverage.

- Data Processing and Compound Identification: Process the raw HRMS data using software (e.g., XCMS, MS-DIAL) for peak picking, alignment, and componentization. Prioritize features present in the bioactive fractions. Use accurate mass to generate molecular formulae and search against chemical databases (e.g., PubChem). Utilize MS/MS spectral libraries and in silico fragmentation tools for structure elucidation [20].

- Confirmation: Where possible, confirm the identity and biological activity of the putative toxicant by obtaining an authentic standard and re-analyzing it under the same conditions (retention time matching, MS/MS spectrum matching) and re-testing it in the bioassay.
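Two of the calculations implied by this procedure can be sketched briefly: normalizing fraction responses to the whole extract, and checking a measured mass against a candidate formula. This is a minimal illustration, not the workflow of any specific software; the 0.2 activity threshold, the fraction names, and the response values are hypothetical.

```python
def normalize_to_whole_extract(fraction_responses, whole_extract_response):
    """Express each fraction's bioassay response relative to the unfractionated extract."""
    if whole_extract_response <= 0:
        raise ValueError("whole-extract response must be positive")
    return {
        name: response / whole_extract_response
        for name, response in fraction_responses.items()
    }


def active_fractions(normalized, threshold=0.2):
    """Return the fractions whose normalized response meets a (hypothetical) threshold."""
    return sorted(name for name, value in normalized.items() if value >= threshold)


def mass_error_ppm(measured_mz, theoretical_mz):
    """Relative mass error in parts per million, used when matching accurate mass to a formula."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6


# Hypothetical bioassay responses in arbitrary units.
responses = {"F01": 0.4, "F02": 6.0, "F03": 1.0, "F04": 3.0}
normalized = normalize_to_whole_extract(responses, whole_extract_response=10.0)
hits = active_fractions(normalized)
```

Only the fractions returned in `hits` would then be carried forward to LC-HRMS, which keeps the non-targeted analysis focused on bioactive material.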

Application Note: Computational Methods in Predictive Ecotoxicology

Background and Principle

Computational methods, often termed in silico toxicology, offer a pathway to assess chemical hazards without animal testing, aligning with the 3Rs principle (Replacement, Reduction, Refinement) [21]. These methods are indispensable for prioritizing the risk of the vast number of existing and new chemicals for which empirical toxicity data is lacking. The foundation of these approaches is the principle that the biological activity of a chemical is a function of its molecular structure [21]. By building mathematical models based on known data, the toxicity of untested, structurally similar chemicals can be predicted.

Key Methodologies and Endpoints

Table 2: Overview of Major Computational Methods in Predictive Ecotoxicology

| Method | Core Principle | Common Application in Ecotoxicology | Key Considerations |

|---|---|---|---|

| Quantitative Structure-Activity Relationship (QSAR) | Develops a quantitative model that relates descriptors of a chemical's structure to a biological activity or property. | Predicting acute toxicity (e.g., LC50 for fish), bioaccumulation potential, and environmental fate (e.g., biodegradation) [21]. | Model domain of applicability is critical; predictions are unreliable for chemicals outside the structural space of the training set. |

| Read-Across | Infers the properties of a "target" chemical by using data from similar "source" chemicals (analogues). | Filling data gaps for regulatory submissions, particularly for categories of chemicals like polymers or UVCBs (Unknown or Variable composition, Complex reaction products, or Biological materials). | Justification for the similarity of the analogues is a key step and potential source of uncertainty. |

| Adverse Outcome Pathway (AOP) Development | Organizes existing knowledge about linked events across biological levels from a molecular initiating event to an adverse outcome at the organism or population level [21]. | Providing a mechanistic framework for interpreting in vitro and in silico data in an ecologically relevant context. Used in integrated testing strategies. | AOPs are qualitative frameworks; quantitative AOPs (qAOPs) are needed for predictive risk assessment. |

Experimental Protocol: Developing a QSAR Model for Toxicity Prediction

Title: In Silico Prediction of Acute Aquatic Toxicity using Quantitative Structure-Activity Relationship (QSAR) Modeling.

Objective: To develop and validate a QSAR model for predicting the acute toxicity (e.g., 48-hour LC50 for Daphnia magna) of new chemical entities.

Materials and Reagents:

- Chemical Dataset: A curated set of chemicals with reliable, experimental acute toxicity data (e.g., from the EPA ECOTOX database [22]).

- Chemical Structure Representation: Software to generate and handle chemical structures (e.g., SMILES strings, SDF files).

- Molecular Descriptor Calculation Software: Tools like PaDEL-Descriptor, DRAGON, or those integrated into modeling suites.

- QSAR Modeling Software: Platforms such as KNIME, Orange Data Mining, or specialized software like WEKA.

- Validation Tools: Software with capabilities for statistical validation (e.g., cross-validation, external validation).

Procedure:

- Data Collection and Curation: Compile a dataset of chemicals and their corresponding experimental toxicity values. Remove duplicates and compounds with uncertain structures or data. Divide the dataset into a training set (~70-80%) for model development and a test set (~20-30%) for external validation.

- Descriptor Calculation and Selection: For each chemical in the dataset, compute a wide range of molecular descriptors (e.g., constitutional, topological, electronic, and geometrical). Pre-process the data by removing constant and correlated descriptors. Use feature selection methods (e.g., genetic algorithms, stepwise selection) to reduce dimensionality and select the most relevant descriptors for the model.

- Model Development: Apply a statistical or machine learning algorithm (e.g., Multiple Linear Regression (MLR), Partial Least Squares (PLS), or Support Vector Machines (SVM)) to the training set to build a model that correlates the selected descriptors with the toxicity endpoint.

- Model Validation: Rigorously validate the model to assess its predictive power and reliability.

  - Internal Validation: Use techniques like cross-validation (e.g., 5-fold or 10-fold) on the training set. Report metrics like Q² (cross-validated correlation coefficient).

  - External Validation: Use the held-out test set to evaluate the model's performance on unseen data. Report metrics including R² (coefficient of determination), RMSE (root mean square error), and MAE (mean absolute error).

- Defining the Applicability Domain: Characterize the chemical space of the training set using methods like leverage or distance-based measures. This defines the scope within which the model can make reliable predictions.

- Prediction and Reporting: Use the validated model to predict the toxicity of new chemicals. Clearly report the prediction along with an indication of whether the chemical falls within the model's applicability domain.

Visualizing Workflows and Pathways

The following diagrams, generated using Graphviz DOT language, illustrate the core workflows and conceptual frameworks described in these application notes.

EDA-NTA Workflow for Toxicant Identification

Adverse Outcome Pathway (AOP) Conceptual Framework

QSAR Model Development Workflow

Table 3: Key Research Reagents and Databases for Ecotoxicology and Environmental Chemistry

| Category | Item/Resource | Function and Application |

|---|---|---|

| Bioassays | Yeast Estrogen Screen (YES) / Yeast Androgen Screen (YAS) | In vitro reporter gene assays used to detect compounds that activate estrogen or androgen receptors, crucial for EDA of endocrine-disrupting compounds [20]. |

| Analytical Standards | Isotope-Labeled Internal Standards | Added to samples prior to analysis via HRMS to correct for matrix effects and instrument variability, improving quantitative accuracy in NTA. |

| Chromatography | HILIC Columns | Hydrophilic Interaction Liquid Chromatography columns; used to retain and separate highly polar and ionic compounds that are often missed by standard reverse-phase methods, reducing analytical bias [20]. |

| Computational Tools | Quantitative Structure-Activity Relationship (QSAR) Software | Used to build predictive models that relate a chemical's molecular structure to its toxicological activity, filling data gaps for new chemicals [21]. |

| Data Resources | EPA ECOTOX Database | A comprehensive, publicly available database providing single chemical toxicity data for aquatic life, terrestrial plants, and wildlife [22]. Essential for model training and validation. |

| Data Resources | Health and Environmental Research Online (HERO) | A database of over 600,000 scientific references and data from peer-reviewed literature used by the U.S. EPA to support regulatory decision-making [22]. Vital for systematic reviews. |

In the field of ecotoxicology, the credibility of research is paramount for informing environmental risk assessments and regulatory decisions. The process of systematic review, which aims to comprehensively identify, evaluate, and synthesize all relevant studies on a particular question, is fundamentally dependent on the availability and transparency of primary research [23]. Pre-registration—the practice of detailing a research plan in a time-stamped, immutable registry before a study is conducted—serves as a powerful tool to enhance this transparency and combat issues like publication bias and undisclosed analytical flexibility, thereby strengthening the entire evidence base for systematic reviews [24]. This protocol outlines the importance of pre-registration and provides a detailed framework for its implementation in ecotoxicological research.

Table 1: Core Concepts in Pre-registration for Ecotoxicology

| Concept | Definition | Relevance to Ecotoxicology |

|---|---|---|

| Pre-registration | The practice of submitting a detailed research plan to a public registry before conducting a study [24]. | Creates a public record of planned vs. unplanned work, distinguishing hypothesis testing from exploration. |

| Confirmatory Research | Research that involves testing a specific, pre-defined hypothesis with the goal of minimizing false-positive findings [24]. | Essential for definitively establishing the toxicity of a chemical or the effect of an environmental stressor. |

| Exploratory Research | Research that involves looking for potential relationships, effects, or differences without a single, pre-specified test; it is hypothesis-generating [24]. | Crucial for discovering unexpected toxicological effects or interactions, such as hormesis [25]. |

| Transparent Changes | The documented and justified disclosure of any deviations from the pre-registered plan that occur during the research process [24]. | Maintains the credibility of a study when practical constraints or unforeseen issues necessitate protocol changes. |

The Rationale: Why Pre-registration is Critical for Ecotoxicology

Pre-registration future-proofs research by clearly distinguishing planned, confirmatory analyses from unplanned, exploratory analyses. This distinction is critical for maintaining the diagnostic value of statistical inferences, such as p-values [24]. In ecotoxicology, where studies often involve multiple endpoints and complex statistical models (e.g., probit regression for binary mortality data or ANOVA for growth comparisons), the risk of data-dependent decisions inflating false-positive rates is significant [25]. Pre-registration mitigates this risk by locking in the analytical plan prior to data collection. Furthermore, it addresses publication bias by ensuring that studies with null results are part of the scientific record, as the pre-registration timestamp stakes a claim to the research idea independent of the eventual outcome [24]. This provides a more complete and less biased body of literature for systematic reviews, such as those curating data for the ECOTOXicology Knowledgebase, to synthesize [23].

Application Notes and Protocols for Pre-registration

Pre-registration Workflow and Decision Protocol

The following diagram outlines the key stages and decision points in the pre-registration process for an ecotoxicology study.

Detailed Experimental Methodology for a Pre-registered Ecotoxicity Test

This protocol uses a standard acute lethality test with a sub-sampling design for confirmation as an example.

Title: Pre-registered Protocol for Determining LC50 in Daphnia magna with Exploratory and Confirmatory Phases.

Objective: To determine, in a confirmatory test, the 48-hour median lethal concentration (LC50) of a test chemical in Daphnia magna.

Hypothesis: The LC50 of the test chemical for Daphnia magna is between X and Y mg/L.

1. Test Organisms and Acclimation:

- Organism: Daphnia magna, neonates (<24 hours old).

- Source: In-house culture or certified supplier.

- Acclimation: Acclimate to test conditions (20 ± 1 °C, 16:8 h light:dark cycle) for a minimum of 48 hours prior to testing.

- Handling: Organisms shall not be fed during the final 2 hours of acclimation before the test or for the duration of the test.

2. Experimental Design:

- Test Type: Static non-renewal.

- Concentrations: A minimum of five test concentrations and a negative control (reconstituted water per standard guidelines [25]).

- Replicates: Four replicates per concentration.

- Organisms per Replicate: Five neonates, randomly assigned.

- Total Organisms: (5 concentrations + 1 control) × 4 replicates × 5 organisms = 120 neonates.

- Randomization: The assignment of test beakers to positions in the environmental chamber will be fully randomized. A random number generator will be used to create the layout.
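The design arithmetic and the randomized layout can be sketched as follows. The seed value and the treatment labels are hypothetical; in a pre-registered study, the seed actually used would be recorded so that the layout can be reproduced.

```python
import random

N_CONCENTRATIONS = 5
REPLICATES = 4
ORGANISMS_PER_REPLICATE = 5

# One negative control plus five test concentrations.
treatments = ["control"] + [f"conc_{i}" for i in range(1, N_CONCENTRATIONS + 1)]

# One beaker per treatment-replicate combination.
beakers = [(t, r) for t in treatments for r in range(1, REPLICATES + 1)]
total_organisms = len(beakers) * ORGANISMS_PER_REPLICATE

# Hypothetical seed; record whichever seed is actually used.
rng = random.Random(2024)
positions = list(range(1, len(beakers) + 1))
rng.shuffle(positions)

# Map each chamber position to its (treatment, replicate) beaker.
layout = dict(zip(positions, beakers))
```

Here `layout` holds 24 beakers in randomized chamber positions, and `total_organisms` reproduces the 120 neonates stated in the design.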

3. Data Collection:

- Endpoint: Mortality (immobility) at 48 hours. An organism is considered immobile if no movement is observed within 15 seconds after gentle agitation.

- Blinding: The personnel scoring mortality will be blinded to the treatment groups. A second researcher will code the test beakers with non-revealing identifiers.

4. Confirmatory Statistical Analysis Plan:

- Primary Model: LC50 and its 95% confidence interval will be determined using probit regression [25].

- Software: Analysis will be conducted in R using the drc package [25].

- Model Fit: Goodness-of-fit will be assessed via a Pearson chi-square test.

- Acceptance Criteria: The test is considered valid if control mortality is ≤10%.

5. Exploratory Analysis Plan:

- The raw data will be fitted to alternative models (e.g., logit regression) and the model with the best fit (assessed by Akaike Information Criterion) will be reported separately as an exploratory finding.

- Data from the 24-hour time point will be analyzed and reported as exploratory.

6. Split-Sample Validation (Optional):

- If the researcher is in an exploratory phase, the total number of organisms will be doubled (N=240). The data will be randomly split into a "training" set (N=120) for model exploration and a "validation" set (N=120) for confirmatory testing of the identified model [24].
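A random split of this kind could be sketched as below. The organism identifiers and the seed are hypothetical, and the sketch splits individual organisms for simplicity; a real design might instead split by beaker so that replicates stay intact.

```python
import random


def split_sample(organism_ids, seed):
    """Randomly divide organisms into equal-sized training and validation sets."""
    ids = list(organism_ids)
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# Hypothetical identifiers 1..240 and a hypothetical seed.
training, validation = split_sample(range(1, 241), seed=7)
```

Fixing and reporting the seed makes the split itself auditable, which fits the transparency goals of pre-registration.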

Table 2: Key Research Reagent Solutions for Ecotoxicology

| Item | Function/Explanation | Example in Protocol |

|---|---|---|

| Test Organisms | Standardized, sensitive species used as bioindicators for toxic effects. | Daphnia magna (water flea) or other model species [23]. |

| Control Water | A standardized, uncontaminated water medium that serves as a baseline for comparing toxic effects. | Reconstituted water per EPA or OECD guidelines [25]. |

| Probit/Logit Model | Statistical models suitable for analyzing binary (e.g., dead/alive) dose-response data to calculate LC50/EC50 [25]. | Used in the confirmatory analysis to determine the median lethal concentration. |

| ECOTOX Knowledgebase | A curated database of ecotoxicity tests used to inform test design and place results in the context of existing literature [23]. | Consulted during the pre-registration planning phase to identify relevant test concentrations and methodologies. |

| Benchmark Dose Software | Specialized software (e.g., US EPA BMDS) for conducting dose-response modeling and determining benchmark doses [25]. | An alternative tool for performing the primary statistical analysis. |

| R with drc package | A statistical programming environment and a specific package for analyzing dose-response curves [25]. | The planned software for executing the confirmatory probit regression analysis. |

Implementing Transparency in Practice

Navigating Changes and Exploratory Analysis

A pre-registration is a plan, not an unchangeable straitjacket. It is expected that deviations may occur due to unforeseen circumstances. The key is to handle these changes transparently [24].

- Creating a Transparent Changes Document: When writing up the results, researchers must create a "Transparent Changes" document that is uploaded alongside the final manuscript. This document should:

- List every deviation from the pre-registered plan.

- Provide a clear and justified reason for each change.

- Distinguish between changes made before any data analysis (e.g., a different dilution factor was needed) and those made after seeing the data.

- Reporting Exploratory Analyses: All unplanned, exploratory analyses must be clearly identified as such in the manuscript (e.g., in a separate section titled "Exploratory Analyses"). This ensures readers can distinguish between hypothesis-testing and hypothesis-generating results, understanding that p-values from exploratory analyses are less diagnostic [24].

Data and Workflow Transparency

Clear visual communication is a critical component of research transparency. However, many scientific figures, particularly those using arrow symbols, are ambiguous and can be misinterpreted by learners and researchers [26]. The following diagram provides a standardized visual model for the central dogma, using arrows with clearly defined meanings to avoid confusion.

Adhering to visualization guidelines that ensure sufficient color contrast between elements (like arrows or text) and their background is also essential for accessibility and clear communication [27]. This practice ensures that figures are interpretable by all readers and in various publication formats.

Executing Your Review: A Step-by-Step Methodology for Ecotoxicology Research

A well-defined research question is the critical first step in conducting a rigorous systematic review in ecotoxicology. It establishes the review's scope, guides the search strategy, and determines the eligibility criteria for including primary studies. The use of structured frameworks ensures that all key components of the ecotoxicological inquiry are comprehensively addressed. The PICO (Population, Intervention, Comparator, Outcome) and its ecotoxicology-specific adaptation, PECO (Population, Exposure, Comparator, Outcome), provide a standardized methodology for formulating precise and answerable research questions [28]. Applying these frameworks systematically helps researchers avoid ambiguity, enhances the reproducibility of the review process, and ensures that the synthesized evidence directly addresses the intended research or risk assessment objective [29] [30].

Within ecotoxicology, these structured frameworks are indispensable for organizing the vast and complex body of literature on chemical effects on organisms and ecosystems. For example, a systematic review protocol investigating the effects of chemicals on tropical reef-building corals explicitly defined its PICO elements to create clear boundaries for the evidence synthesis [30]. Similarly, the U.S. EPA's ECOTOX Knowledgebase employs a PECO statement to screen and include relevant toxicity studies with high specificity [31]. This precise framing is essential for generating reliable toxicity thresholds, such as No Observed Effect Concentrations (NOEC) and median lethal concentrations (LC50), which form the basis for ecological risk assessments and regulatory standards [30] [31].

Core Components of the Ecotoxicological Question

The following table details the core components of the PICO/PECO framework, with specific definitions and examples tailored to ecotoxicological systematic reviews.

Table 1: Core Components of the PICO/PECO Framework in Ecotoxicology

| Component | Description | Ecotoxicology-Specific Considerations | Examples from the Literature |

|---|---|---|---|

| Population (P) | The organisms or ecological systems under investigation. | Must be taxonomically verifiable and ecologically relevant. Can include whole organisms, specific life stages (e.g., larvae, adults), or even in vitro systems (e.g., cells, tissues). [31] | All tropical reef-building coral species (e.g., hermatypic scleractinians, Millepora). This includes all developmental stages and associated symbionts. [30] |

| Intervention/Exposure (I/E) | The chemical stressor or environmental contaminant of interest. | A single, verifiable chemical toxicant with a known exposure concentration, duration, and route of administration (e.g., water, soil, diet). [31] | All geogenic (e.g., trace metals) and synthetic chemicals (e.g., herbicide Diuron) with known exposure concentrations. Nutrients (e.g., nitrate) may be excluded depending on the review's focus. [30] |

| Comparator (C) | The reference condition against which the exposure is evaluated. | Typically, a control group that is not exposed to the chemical of interest or is exposed to background environmental levels. This establishes a baseline for measuring effect. [31] | A population not exposed to the chemicals, or a population prior to chemical exposure. [30] |

| Outcome (O) | The measured biological or ecological endpoints indicating an effect. | Encompasses a wide range of endpoints from molecular to population levels. Common outcomes include mortality, growth reduction, reproductive impairment, biochemical changes, and bioaccumulation. [31] | All outcomes related to health status, from molecular (gene expression) to colony-level (photosynthesis, bleaching) and population-level (mortality rate). [30] |

The PECO framework used by the ECOTOX Knowledgebase further refines these components with strict eligibility criteria for data inclusion. Its "Effect" outcome is broad, capturing records related to mortality, growth, reproduction, physiology, behavior, biochemistry, genetics, and population-level effects [31].
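During screening, PECO criteria like those in the table can be encoded as explicit checks so that every exclusion carries a stated reason. The sketch below is a hypothetical illustration; the field names and example records are invented for this purpose and do not reproduce the actual ECOTOX curation rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StudyRecord:
    species: Optional[str]                 # verified taxon name, or None if unverifiable
    chemicals: list                        # chemical stressors tested together
    exposure_concentration: Optional[float]
    exposure_duration_h: Optional[float]
    has_control: bool
    effects: list                          # measured endpoints, e.g. "mortality"


def peco_exclusion_reasons(record):
    """Return the PECO criteria a record fails; an empty list means it is eligible."""
    reasons = []
    if not record.species:
        reasons.append("P: species not taxonomically verifiable")
    if len(record.chemicals) != 1:
        reasons.append("E: not a single, verifiable chemical")
    if record.exposure_concentration is None or record.exposure_duration_h is None:
        reasons.append("E: exposure concentration or duration not reported")
    if not record.has_control:
        reasons.append("C: no control or reference condition")
    if not record.effects:
        reasons.append("O: no measured effect reported")
    return reasons


# Hypothetical records for illustration.
eligible = StudyRecord("Daphnia magna", ["copper"], 0.05, 48.0, True, ["mortality"])
mixture = StudyRecord("Daphnia magna", ["copper", "zinc"], None, 48.0, True, ["mortality"])
```

Returning reasons rather than a bare yes/no makes it straightforward to tabulate exclusions for a screening flow diagram.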

Experimental Protocols for Ecotoxicology Studies

The primary evidence for ecotoxicological systematic reviews originates from standardized toxicity tests. The following protocol outlines the general methodology for a typical acute toxicity test, which measures the effects of short-term chemical exposure.

Protocol 1: Standard Acute Toxicity Test for Aquatic Organisms

1. Objective: To determine the acute effects of a chemical on a defined aquatic population, typically resulting in the calculation of a median lethal concentration (LC50) or median effective concentration (EC50).

2. Materials and Reagents

- Test Chemical: Pure compound of known identity and purity (e.g., CASRN verified).

- Test Organisms: A defined number of individuals of a target species (e.g., Daphnia magna, fathead minnow), of a specific age and health status, acclimated to laboratory conditions.

- Test Chambers: Glass or chemically inert containers of appropriate volume (e.g., beakers, aquaria).

- Dilution Water: A standardized, clean water medium of known hardness, pH, and alkalinity.

- Aeration System: To maintain dissolved oxygen levels.

- Environmental Control System: Equipment to maintain constant temperature, and a controlled light-dark cycle.

3. Procedure

   1. Test Solution Preparation: Prepare a logarithmic series of at least five concentrations of the test chemical via serial dilution in the dilution water. Include a control treatment (zero concentration of the test chemical).

   2. Randomization and Allocation: Randomly assign test organisms to each test chamber, ensuring each concentration and the control is replicated (typically 3-4 replicates).

   3. Exposure: Gently introduce the organisms into their respective test chambers. The test duration is typically 24, 48, or 96 hours, depending on the species and standard guidelines.

   4. Maintenance: Do not feed the organisms during acute tests. Maintain constant environmental conditions (temperature, light). Aerate solutions if needed without causing excessive loss of the chemical.

   5. Monitoring: Monitor and record water quality parameters (temperature, pH, dissolved oxygen) daily in at least one replicate per treatment.

   6. Data Collection: At the end of the exposure period, record the number of dead or affected organisms in each chamber. The endpoint for mortality is typically the lack of movement after gentle prodding.

4. Data Analysis

- Calculate the percentage mortality or effect in each test concentration.

- Correct for mortality in the control group using Abbott's formula if necessary.

- Use statistical probit or logistic regression analysis to calculate the LC50/EC50 value and its 95% confidence intervals.
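The first two data-analysis steps reduce to simple arithmetic. The sketch below computes percentage mortality and applies Abbott's formula, corrected % = 100 × (treatment % − control %) / (100 − control %). The counts are hypothetical, and flooring negative corrected values at zero is a choice made for this sketch.

```python
def percent_mortality(n_dead, n_exposed):
    """Percentage of exposed organisms that died."""
    return 100.0 * n_dead / n_exposed


def abbott_corrected(treatment_pct, control_pct):
    """Correct treatment mortality for control mortality using Abbott's formula."""
    if control_pct >= 100:
        raise ValueError("control mortality of 100% cannot be corrected")
    corrected = 100.0 * (treatment_pct - control_pct) / (100.0 - control_pct)
    # Floor at zero when a treatment shows less mortality than the control.
    return max(corrected, 0.0)


# Hypothetical counts: 1 of 20 dead in the control, 12 of 20 dead in one treatment.
control = percent_mortality(1, 20)
treated = percent_mortality(12, 20)
corrected = abbott_corrected(treated, control)
```

With these counts, the observed 60% mortality is corrected to roughly 57.9%, and the corrected values are what would be passed on to the probit or logistic regression.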

The methodology for chronic tests is similar but of longer duration (e.g., 7 to 28 days), often involves renewal of test solutions, and includes feeding. The outcomes measured are sublethal, such as reproductive output or growth rate, from which endpoints like the No Observed Effect Concentration (NOEC) and Lowest Observed Effect Concentration (LOEC) are derived [30] [31].

Diagram 1: Acute toxicity test workflow.

The Scientist's Toolkit: Essential Reagents and Materials

Table 2: Key Research Reagent Solutions and Materials in Ecotoxicology

| Item | Function/Description | Application Example |

|---|---|---|

| Standard Test Organisms | Well-characterized species with known sensitivity and standardized culturing methods. Used as biological indicators of chemical toxicity. | Aquatic tests: Fathead minnow (Pimephales promelas), Water flea (Daphnia magna). Terrestrial tests: Earthworm (Eisenia fetida). [31] |

| Reference Toxicants | Standard, pure chemicals with well-characterized toxicity (e.g., potassium dichromate, sodium chloride). Used to validate the health and sensitivity of test organisms. | Routinely tested to ensure the bioassay system is responding within an expected range, verifying test validity. [31] |

| Reconstituted Dilution Water | A synthetic laboratory water prepared with specific salts to achieve a standardized hardness, pH, and alkalinity. | Provides a consistent and uncontaminated medium for preparing test solutions in aquatic toxicity tests, minimizing confounding variables. [31] |

| ASTM/OECD Test Guidelines | Standardized procedural documents detailing approved methods for conducting toxicity tests. | Examples: USEPA Series 850, OECD Series on Testing and Assessment. Ensure tests are conducted consistently and results are comparable across studies. [31] |

Application in Evidence Synthesis and Risk Assessment

The rigorous application of PECO during study screening and data extraction directly feeds into the quantitative synthesis of toxicity data. The extracted toxicity values (e.g., LC50, NOEC) from multiple studies for a given chemical and species group can be statistically analyzed to derive species sensitivity distributions (SSDs). These SSDs are fundamental for determining predictive toxicity reference values (TRVs) and Aquatic Life Criteria, which are used by regulatory bodies like the U.S. EPA to set protective environmental quality guidelines [31]. The entire process, from the initial PECO question to the final risk assessment value, is visualized in the following workflow.

Diagram 2: PECO to risk assessment workflow.
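The SSD step described above can be sketched in a few lines. This example assumes a log-normal SSD, which is one common choice and not necessarily the model used by any particular regulatory body, and it derives the concentration expected to be hazardous to 5% of species (HC5). The function names and the toxicity values are illustrative assumptions.

```python
import math
from statistics import NormalDist, mean, stdev

def fit_lognormal_ssd(toxicity_values):
    """Fit a normal distribution to log10-transformed toxicity values.

    toxicity_values: one value per species (e.g., LC50 or NOEC, same units).
    """
    logs = [math.log10(v) for v in toxicity_values]
    return NormalDist(mu=mean(logs), sigma=stdev(logs))

def hazard_concentration(ssd, fraction=0.05):
    """Concentration at which the given fraction of species is affected."""
    return 10 ** ssd.inv_cdf(fraction)

def fraction_affected(ssd, concentration):
    """Fraction of species expected to be affected at a concentration."""
    return ssd.cdf(math.log10(concentration))

# Made-up LC50 values (mg/L) for six species, for illustration only.
lc50_by_species = [0.8, 2.5, 4.1, 9.7, 15.0, 40.0]
ssd = fit_lognormal_ssd(lc50_by_species)
print(f"HC5: {hazard_concentration(ssd):.3f} mg/L")
print(f"Fraction affected at 5 mg/L: {fraction_affected(ssd, 5.0):.2f}")
```

With few species the fitted standard deviation is uncertain, so an HC5 from a sketch like this should be read as a point estimate without confidence bounds.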

Systematic reviews in ecotoxicology aim to synthesize all available evidence to answer specific research questions regarding the effects of chemical contaminants on biological systems. A fundamental principle of a high-quality systematic review is the comprehensive and unbiased search for relevant studies. This necessitates a strategic approach to navigating multiple bibliographic databases and grey literature sources, while actively mitigating biases, including language bias, which can skew results by overlooking non-English publications. This protocol provides detailed methodologies for constructing and executing a systematic search strategy tailored to the field of ecotoxicology.

Developing the Systematic Search Plan

A pre-defined, transparent search plan is critical to minimize bias and ensure the review's reproducibility [32].

Core Components of a Search Strategy

A robust search strategy is built from a combination of conceptual elements and technical search terms. The table below outlines the essential components.

Table 1: Core Components of a Systematic Search Strategy

| Component | Description | Application in Ecotoxicology |

|---|---|---|

| PICOS Framework | Defines the Population, Intervention, Comparator, Outcomes, and Study types. | Population: Specific organism (e.g., Daphnia magna). Intervention: Specific contaminant (e.g., glyphosate). Comparator: Control/unexposed groups. Outcomes: Measured endpoints (e.g., mortality, reproduction). Study: Experimental lab studies, field studies. |

| Keywords | Free-text terms searched in titles and abstracts. | Include synonyms, common names, and scientific names, combined with OR (e.g., "Roundup" OR "glyphosate"). Use truncation (e.g., ecotox* for ecotoxicity, ecotoxicological) and wildcards (e.g., behavi*r for behavior, behaviour) [33]. |

| Controlled Vocabulary | Pre-defined subject terms (e.g., MeSH in MEDLINE, Emtree in Embase). | Identify relevant terms in each database. For example, the MeSH term "Water Pollutants, Chemical" can be exploded to include more specific terms [33]. |

| Boolean Operators | AND, OR, NOT used to combine terms. | OR: Broadens search (e.g., "freshwater fish" OR trout OR zebrafish). AND: Narrows search (e.g., microplastics AND growth). NOT: Excludes concepts (use cautiously to avoid missing relevant studies) [33]. |

A comprehensive search involves multiple information sources to capture both published and grey literature. The following workflow diagrams the recommended process, from planning to results management.

Figure 1: Workflow for Systematic Search Execution

2.2.1. Bibliographic Databases

A core set of multidisciplinary and subject-specific databases should be searched. The table below lists key databases for ecotoxicology research.

Table 2: Key Information Sources for Ecotoxicology Systematic Reviews

| Source Type | Database/Resource Name | Focus & Relevance |

|---|---|---|

| Multidisciplinary Databases | Web of Science, Scopus | Core sources covering high-impact journals across sciences. Essential for comprehensive coverage. |

| Subject-Specific Databases | Environment Complete, AGRICOLA, PubMed | Cover specialized literature in environmental sciences, agriculture, and toxicology. |

| Grey Literature Databases | OpenGrey, ProQuest Dissertations & Theses | Provide access to European grey literature and academic theses, respectively. |

| Targeted Websites & Repositories | US EPA, EFSA, ECOTOX Knowledgebase, government reports | Host regulatory data, risk assessments, and technical reports not found in journals. |

2.2.2. Grey Literature Search Protocol

Grey literature is crucial to minimize publication bias, as studies with null or non-significant results are less likely to be published in academic journals [33]. A systematic approach to grey literature should incorporate four complementary strategies [34] [35]:

- Grey Literature Databases: Search specialized databases like OpenGrey.

- Customized Google Search Engines: Use advanced search operators and site-specific searches (e.g., site:epa.gov microplastics fish) [34].

- Targeted Website Searching: Identify and search relevant organizational websites (e.g., environmental protection agencies, research institutes) [34].

- Consultation with Experts: Contact researchers and professionals in the field to identify ongoing or unpublished studies [34].

Experimental Protocol: Building and Executing the Search

This section provides a detailed, step-by-step methodology.

Search Strategy Formulation

- Step 1: Extract Key Concepts. Using the PICOS framework, identify the main concepts of your review question. For example: "What is the effect of neonicotinoid pesticides (I) on the mortality (O) of pollinators (P) in field-based studies (S)?"

- Step 2: Generate Keyword List. For each concept, compile a comprehensive list of synonyms, related terms, and variant spellings.

- Example: Neonicotinoids → "neonicotinoid", "imidacloprid", "clothianidin", "thiamethoxam".

- Example: Pollinator mortality → "mortality", "death", "lethality", "survival".

- Step 3: Identify Controlled Vocabulary. In each database, identify the relevant controlled vocabulary terms (e.g., MeSH in PubMed: "Neonicotinoids", "Insects", "Mortality").

- Step 4: Combine Terms with Boolean Logic. Structure your search strategy as demonstrated below.

Figure 2: Search Term Combination Logic
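The combination logic in Step 4 can be sketched as follows: synonyms within a concept are joined with OR, and the resulting concept blocks are joined with AND. The helper names `or_block` and `build_query` are hypothetical, and the output is a generic Boolean string that would still need to be adapted to each database's syntax.

```python
def or_block(terms):
    """Join the synonyms for one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"

def build_query(concepts):
    """Join concept blocks with AND. `concepts` maps concept name -> terms."""
    return " AND ".join(or_block(terms) for terms in concepts.values())

# Keyword lists taken from the examples in Step 2.
concepts = {
    "exposure": ["neonicotinoid", "imidacloprid", "clothianidin", "thiamethoxam"],
    "outcome": ["mortality", "death", "lethality", "survival"],
}
print(build_query(concepts))
```

Keeping each concept as a separate list makes it easy to add a further block (for example, population terms) and to report exactly which terms were searched.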

Mitigating Language Bias

To prevent the systematic exclusion of non-English studies, which constitutes language bias, implement the following in your protocol:

- No Language Restrictions: Do not apply language filters in your database searches.

- Inclusion of Non-English Studies: Explicitly state in the protocol that studies in all languages will be included at the search stage.

- Translation Plan: Develop a practical plan for translating the titles and abstracts of non-English studies to inform inclusion decisions. This may involve using translation software or seeking assistance from colleagues or professional services.

Search Execution and Documentation

- Step 1: Translate the Strategy. Adapt the core search strategy for the syntax and controlled vocabulary of each database [33].

- Step 2: Run and Record Searches. Execute the search for each database and grey literature source. Record the exact search string, the date of search, and the number of records retrieved for each source. Use citation management software (e.g., EndNote, Zotero) or systematic review platforms (e.g., Covidence) to collate results and remove duplicates [33].

- Step 3: Report Transparently. The full search strategy for at least one major database (e.g., Web of Science) should be included in an appendix to the review. The screening process should be documented using a PRISMA flow diagram [33].

The Scientist's Toolkit: Essential Reagents for Systematic Searching

Table 3: Essential Digital Tools for Systematic Review Searching

| Tool / Resource | Function | Application Note |

|---|---|---|

| Bibliographic Databases (Web of Science, Scopus, etc.) | Primary sources for published academic literature. | Strategies must be translated for each platform's unique query language and controlled vocabulary. |

| Reference Management Software (EndNote, Zotero) | Manages citations, PDFs, and facilitates duplicate removal. | Essential for handling the large volume of records retrieved from multiple databases. |

| Systematic Review Platforms (Covidence, Rayyan) | Web-based tools for collaborative screening of titles/abstracts and full-texts. | Streamlines the screening process, manages conflicts, and generates PRISMA flow diagrams. |

| Grey Literature Databases (OpenGrey) | Catalogues reports, theses, and other non-commercially published material. | A structured search of these sources is necessary to minimize publication bias [34]. |

| Advanced Google Searching | Uncovers relevant documents on institutional and government websites. | Using site: and filetype: operators can target searches effectively (e.g., site:epa.gov filetype:pdf). |

| PRISMA Statement & Flow Diagram | Reporting standards for systematic reviews and meta-analyses. | Provides a checklist and a standardized flow diagram to document the study selection process [33]. |

Study selection, the step in which inclusion and exclusion criteria are defined and applied, is a critical determinant of a systematic review's validity and reliability. Ecotoxicology systematically investigates the effects of toxic chemicals on terrestrial, freshwater, and marine ecosystems, examining impacts from the individual to the community level [8]. The field spans diverse taxa (from invertebrates and fish to terrestrial wildlife) and multiple biomes, making the establishment of robust, pre-defined eligibility criteria more challenging, and more crucial, than in many other disciplines [36]. A poorly defined selection process can introduce bias, threaten the transparency of the synthesis, and ultimately undermine the utility of the review for regulators and researchers [37] [36]. This protocol provides detailed application notes for navigating these complexities, ensuring a systematic and objective study selection process.

Defining Eligibility Criteria for Ecotoxicological Contexts

The Population, Intervention, Comparator, Outcome (PICO) framework, or its ecotoxicological adaptation PECO/T (Population, Exposure, Comparator, Outcome/Time), is the cornerstone for developing a focused research question and corresponding eligibility criteria [3] [38]. In ecotoxicology, these elements require careful consideration to handle the field's diversity.

- Population: Criteria must specify the relevant taxa and life stages (e.g., freshwater benthic macroinvertebrates, early life stages of salmonid fish). Biome and habitat (e.g., temperate forest soils, coral reefs) should be defined using explicit classifications (e.g., FAO biome system, specific soil types). Considerations of organism sex, age, and health status may also be relevant [39] [40].

- Exposure/Intervention: Define the chemical stressor(s) of interest, including specific compounds or classes. Pre-specify requirements for exposure characterization, such as measured (not just nominal) concentrations, route of exposure (dietary, waterborne), and duration (acute vs. chronic) [36].

- Comparator: The comparator is typically an unexposed control group or a group exposed to a reference level of the stressor. Criteria should state what constitutes an acceptable control for the included study designs [38].