A Comprehensive Guide to Control Performance Test Reporting for Robust Biomedical Research


Abstract

This article provides a comprehensive framework for control performance test reporting, tailored for researchers, scientists, and professionals in drug development. It addresses the critical need for robust validation of analytical methods and control systems, from establishing foundational principles and selecting appropriate methodologies to troubleshooting common issues and performing rigorous comparative analysis. The guidance is designed to enhance the reliability, reproducibility, and regulatory compliance of performance data in biomedical and clinical research.

Core Principles and Definitions: Building a Foundation for Robust Performance Testing

Quantitative Foundation: Data Trends in Modern Testing

The following tables synthesize key quantitative findings from recent research and regulatory analyses, highlighting the adoption of New Approach Methodologies (NAMs) and Artificial Intelligence (AI) in biomedical sciences.

Table 1: Analysis of AI in Biomedical Sciences (Scoping Review of 192 Studies) [1]

| Scope of Analysis | Key Finding | Details from Review |

|---|---|---|

| By Model | Machine Learning Dominance | Machine learning was the most frequently reported AI model in the literature. |

| By Discipline | Microbiology Leads Application | The discipline most commonly associated with AI applications was microbiology, followed by haematology and clinical chemistry. |

| By Region | Concentration in High-Income Countries | Publications on AI in biomedical sciences mainly originate from high-income countries, particularly the USA. |

| Opportunities | Efficiency, Accuracy, and Applicability | Major reported opportunities include improved efficiency, accuracy, universal applicability, and real-world application. |

| Limitations | Complexity and Robustness | Primary limitations include model complexity, limited applicability in some contexts, and concerns over algorithm robustness. |

Table 2: Regulatory and Policy Shifts in Testing Models (2025) [2] [3] [4]

| Agency / Report | Policy Objective | Timeline & Key Metrics |

|---|---|---|

| U.S. FDA | Phase out conventional animal testing for monoclonal antibodies (mAbs). | Plan to leverage New Approach Methodologies (NAMs) within 3-5 years [2]. |

| U.S. GAO | Scale NAMs from promise to practice; address technical and structural barriers. | 2025 report identifies limited cell availability, lack of standards, and regulatory uncertainty as key challenges [3]. |

| U.S. EPA | Reduce vertebrate animal testing in chemical assessments. | 2025 report concludes many statutes are broadly written and do not preclude the use of NAMs [4]. |

Core Experimental Protocols

Protocol: Proficiency Testing (PT) / External Quality Assessment (EQA)

This protocol ensures analytical quality and comparability of laboratory results, a cornerstone of control performance testing [5].

- 1. Objective: To monitor a laboratory's analytical performance by comparing its results against a peer group and assigned values, ensuring accuracy and reliability.

- 2. Materials & Reagents:

- PT/EQA specimens from a certified provider (e.g., College of American Pathologists).

- All standard laboratory instruments, calibrators, and reagents used for routine patient testing.

- 3. Methodology:

- Specimen Handling: Process PT/EQA specimens exactly as patient specimens are processed, using the same standard operating procedures.

- Analysis: Perform the analysis for the target analyte(s) in the same batch and with the same personnel as routine patient testing.

- Data Reporting: Report results to the PT provider within the specified deadline, including all required data (e.g., PT result, instrument, method, and reagent information).

- Performance Evaluation: Upon receiving the evaluation report, compare your laboratory's result to the peer group. Performance is often graded using the Standard Deviation Index (SDI) [5], calculated as:

$$\mathrm{SDI} = \frac{\text{Laboratory's Result} - \text{Peer Group Mean}}{\text{Peer Group Standard Deviation}}$$

- 4. Interpretation & Corrective Action:

- Acceptable Performance: The SDI is within the predefined acceptable limits (e.g., an SDI between -2 and +2).

- Unacceptable Performance: An unacceptable result triggers a mandatory process improvement assessment. The cause must be investigated, which may range from staff retraining to a full assay re-validation [5].
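The SDI calculation and grading step described in this protocol can be sketched in a few lines of Python. This is a minimal illustration, not a PT provider's official method: the function names `sdi` and `grade`, the default limit of 2.0 (taken from the example threshold above), and the sample numbers are all assumptions made for the example.

```python
def sdi(lab_result: float, peer_mean: float, peer_sd: float) -> float:
    """Standard Deviation Index: (lab result - peer mean) / peer SD."""
    if peer_sd <= 0:
        raise ValueError("peer_sd must be positive")
    return (lab_result - peer_mean) / peer_sd


def grade(sdi_value: float, limit: float = 2.0) -> str:
    """Grade against a symmetric limit (2.0 mirrors the example in the text)."""
    return "acceptable" if abs(sdi_value) <= limit else "unacceptable"


# Illustrative numbers only; they are not taken from any PT report.
value = sdi(lab_result=104.0, peer_mean=100.0, peer_sd=2.5)
print(f"SDI = {value:.2f} -> {grade(value)}")
```

Actual acceptance limits are set by the PT provider or the applicable regulation, so the `limit` argument should be replaced with the criterion stated in the evaluation report.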

Protocol: Validation of NAMs for Control Performance

This protocol outlines a general framework for establishing the predictive accuracy of New Approach Methodologies (NAMs) as control systems.

- 1. Objective: To qualify and validate a NAM (e.g., organ-on-a-chip, in silico model) for use in preclinical safety assessment, ensuring it provides a robust and human-relevant measure of biological response.

- 2. Materials & Reagents:

- Human-based biological system (e.g., stem-cell derived organoids, primary human cells).

- Microphysiological system (MPS) or bioreactor.

- Analytical endpoints (e.g., transcriptomic profiling, biomarker release, imaging).

- 3. Methodology:

- Benchmarking: Challenge the NAM with compounds of known effect in humans (both positive and negative controls).

- Dosing & Exposure: Apply controlled, longitudinal exposures to the test articles, mimicking in vivo conditions where possible [3].

- Endpoint Analysis: Measure a comprehensive set of endpoints (e.g., cell viability, tissue integrity, functional output, genomic and metabolic profiles) to create a rich data set.

- Data Integration: Use a Weight-of-Evidence (WOE) assessment to integrate results from multiple NAMs and existing preclinical/clinical data to justify safety conclusions [2].

- 4. Interpretation & Validation:

- The model's performance is quantified by its predictive accuracy for known human outcomes.

- Successful validation requires the model to be reproducible, standardized, and its limitations fully acknowledged [3].
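One way to quantify predictive accuracy against benchmark compounds is a simple confusion-matrix summary. The sketch below assumes each benchmark compound has a known binary human outcome (for example, toxic or not toxic) and a binary call from the NAM; the function name `predictive_performance` and the example data are hypothetical.

```python
def predictive_performance(known: list[bool], predicted: list[bool]) -> dict[str, float]:
    """Compare NAM calls with known human outcomes (True = effect present)."""
    if len(known) != len(predicted):
        raise ValueError("known and predicted must have the same length")
    pairs = list(zip(known, predicted))
    tp = sum(k and p for k, p in pairs)              # true positives
    tn = sum((not k) and (not p) for k, p in pairs)  # true negatives
    fp = sum((not k) and p for k, p in pairs)        # false positives
    fn = sum(k and (not p) for k, p in pairs)        # false negatives
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("benchmark set needs both positive and negative controls")
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(pairs),
    }


# Hypothetical benchmark: four positive and four negative control compounds.
known = [True, True, True, True, False, False, False, False]
predicted = [True, True, True, False, False, False, False, True]
print(predictive_performance(known, predicted))
```

Reporting sensitivity and specificity separately, rather than accuracy alone, keeps an unbalanced benchmark set from hiding poor performance on one class.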

Troubleshooting Guides and FAQs

Q1: Our laboratory reported a result that was graded as unacceptable due to a clerical error (e.g., a typo). Can this be regraded? No, clerical errors cannot be regraded. You must document that your laboratory performed a self-evaluation and compared its result to the intended response. This incident should trigger a review of procedures, potentially including additional staff training or implementing a second reviewer for result entry [5].

Q2: What is the first step after receiving an unacceptable PT result? Initiate a process improvement assessment. The cause of the unacceptable response must be determined. For a single error, this may involve targeted training. However, an unsuccessful event (failing the overall score for the program) requires a comprehensive assessment and corrective action for each unacceptable result [5].

Q3: For a calculated analyte like LDL Cholesterol, should we report the calculated value or the directly measured value for PT? You should only report results for direct analyte measurements. For most calculated analytes (e.g., LDL cholesterol, total iron-binding capacity), the PT/EQA is designed to assess the underlying direct measurements. These calculated values should not be reported unless specifically requested [5].

Q4: What are the common pitfalls in reporting genetic variants for biochemical genetics PT?

Common unacceptable errors include: using "X" to indicate a stop codon; adding extra spaces (e.g., c.145 G>A); incorrect usage of upper/lowercase letters; and missing punctuation. Laboratories must conform to the most recent HGVS recommendations [5].
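A small pre-submission check can catch the error types listed above before a result is entered. The sketch below is an assumption-laden lint, not an HGVS validator: it covers only whitespace, the reference-type prefix, "X" as a stop codon, and lowercase nucleotides in substitutions, and the function name `lint_variant` and its messages are hypothetical.

```python
import re


def lint_variant(description: str) -> list[str]:
    """Return a list of formatting problems found in a variant description."""
    problems = []
    if re.search(r"\s", description):
        problems.append("contains whitespace")
    # Reference-type prefixes are lowercase letters followed by a period.
    if not re.match(r"[cgmnopr]\.", description):
        problems.append("missing or miscased prefix such as 'c.' or 'p.'")
    body = description[2:]
    # 'Xaa' (unknown amino acid) is left alone; a bare 'X' is flagged.
    if description.startswith("p.") and re.search(r"X(?!aa)", body):
        problems.append("'X' used for a stop codon; write 'Ter' or '*'")
    # DNA-level substitutions use uppercase nucleotides around '>'.
    if description[:2] in ("c.", "g.") and re.search(r"[acgt]>|>[acgt]", body):
        problems.append("substituted nucleotides should be uppercase")
    return problems


for example in ["c.145G>A", "c.145 G>A", "p.Arg97X", "c.145g>a"]:
    print(example, "->", lint_variant(example) or "no problems found")
```

A check like this supplements, and does not replace, review against the current HGVS recommendations.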

Q5: What is the fundamental purpose of IRB review of informed consent? The fundamental purpose is to assure that the rights and welfare of human subjects are protected. The review ensures subjects are adequately informed and that their participation is voluntary. It also helps ensure the institution complies with all applicable regulations [6].

Q6: Can a clinical investigator also serve as a member of the IRB? Yes, however, the IRB regulations prohibit any member from participating in the IRB's initial or continuing review of any study in which the member has a conflicting interest. They may only provide information requested by the IRB and cannot vote on that study [6].

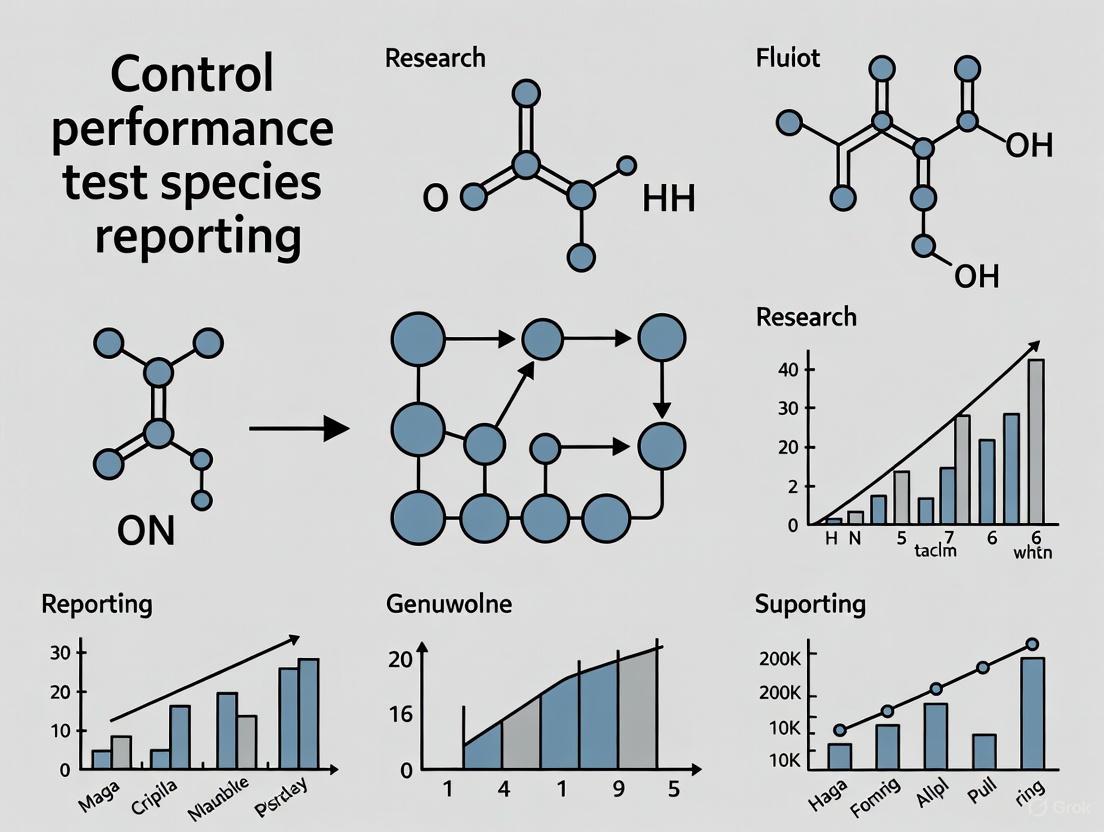

Visual Workflows

PT/EQA Performance Evaluation Logic

NAM Validation and Integration Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Control Performance Testing [5] [3]

| Item / Solution | Function in Experiment |

|---|---|

| Commutable Frozen Human Serum Pools | Serves as accuracy-based PT specimens that behave like patient samples, used for validating method performance in clinical chemistry [5]. |

| Cell Line/Whole Blood Mixtures | Provides robust and consistent challenges for flow cytometry proficiency testing programs, helping to standardize immunophenotyping across laboratories [5]. |

| Stem-Cell Derived Organoids | Provides a human-specific, physiologically dynamic model for disease modeling and toxicity testing, reducing reliance on animal models [3]. |

| High-Quality Diverse Human Cells | The foundational biological component for building representative NAMs (e.g., for organ-on-a-chip systems); access to diverse sources is a current challenge [3]. |

| International Sensitivity Index (ISI) & Mean Normal PT | Critical reagents/information used to calculate and verify the accuracy of the International Normalized Ratio (INR) in hemostasis testing [5]. |

| Standardized Staining Panels | Pre-defined antibody panels for diagnostic immunology and flow cytometry PT, used to ensure consistent antigen detection and reporting across laboratories [5]. |

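The ISI and Mean Normal PT entry in Table 3 feeds the standard INR relationship, in which the ratio of the patient prothrombin time to the mean normal prothrombin time is raised to the power of the ISI. The sketch below shows that arithmetic; the function name `inr` and the sample values are illustrative assumptions, and a laboratory should use the ISI and mean normal PT established for its own reagent and instrument combination.

```python
def inr(patient_pt_s: float, mean_normal_pt_s: float, isi: float) -> float:
    """INR = (patient PT / mean normal PT) ** ISI, with times in seconds."""
    if patient_pt_s <= 0 or mean_normal_pt_s <= 0 or isi <= 0:
        raise ValueError("all inputs must be positive")
    return (patient_pt_s / mean_normal_pt_s) ** isi


# Illustrative values only.
print(round(inr(patient_pt_s=18.0, mean_normal_pt_s=12.0, isi=1.0), 2))
```

Recomputing the INR from the reported PT, ISI, and mean normal PT is a quick way to verify that the values configured in the instrument or information system match those in use.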

FAQs on Performance Metrics in Behavioral Research

Q1: What do "response time" and "latency" measure in an animal cognitive test? In behavioral assays, response time measures the total time from the presentation of a stimulus (e.g., a light, sound, or accessible food) to the completion of the subject's targeted behavioral response [7]. Latency, where it is reported separately, is the delay between stimulus onset and the first measurable sign of a response; it is the behavioral analogue of the software-testing definition, in which latency runs from the moment a request is sent until the first byte is received [7]. Both measures are critical for assessing cognitive processing speed, decision-making, and motor execution. A sudden increase in average response time during tests may indicate performance degradation under stress or cognitive load [8].

Q2: How is "throughput" defined in the context of behavioral tasks? In behavioral research, throughput measures the rate of successful task completions per unit of time. It reflects the efficiency of the cognitive process under investigation [8]. A high throughput indicates that an animal can process information and execute correct responses efficiently. A decline in throughput when task difficulty spikes can indicate that the subject is becoming overwhelmed [8].

Q3: Why is the "error rate" a crucial metric, and what does a high rate indicate?

The error rate is the percentage of trials that end in a failed or incorrect response out of all trials attempted [7]. It is calculated as (Number of failed trials / Total number of trials) × 100 [7]. A high error rate directly indicates problems with task performance, which could stem from poor experimental design, overly complex tasks, lack of animal motivation, or unaccounted-for external confounds such as those reported in detour task experiments [9].
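The error-rate and throughput definitions given in these answers reduce to simple arithmetic over a session's trial outcomes. The sketch below assumes each trial is recorded as a boolean (True for a correct response) and that the session length is known in seconds; the function names and sample session are hypothetical.

```python
def error_rate_percent(outcomes: list[bool]) -> float:
    """Failed trials as a percentage of all trials attempted."""
    if not outcomes:
        raise ValueError("no trials recorded")
    failed = sum(not ok for ok in outcomes)
    return 100.0 * failed / len(outcomes)


def throughput_per_minute(outcomes: list[bool], session_seconds: float) -> float:
    """Successful task completions per minute of session time."""
    if session_seconds <= 0:
        raise ValueError("session_seconds must be positive")
    return 60.0 * sum(outcomes) / session_seconds


# Hypothetical session: 10 trials in 300 seconds, 2 of them incorrect.
session = [True, True, False, True, True, True, False, True, True, True]
print(error_rate_percent(session))          # percentage of failed trials
print(throughput_per_minute(session, 300))  # correct responses per minute
```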

Q4: What metrics are used to evaluate the "stability" of a testing protocol? Stability refers to the consistency and reliability of results over time and across conditions. Key metrics to assess this include:

- Endurance (Soak) Testing Metrics: Track subject performance over an extended session or across repeated sessions to detect gradual performance degradation or motivational drift [8].

- Repeatability: The degree to which the same individual performs consistently when retested. For example, the low but significant repeatability of inhibitory control performance in wild great tits across years and contexts suggests that the assay captures some intrinsic, stable differences among individuals [9].

- Response Time Consistency: Using percentiles (P90, P95) instead of just averages helps gauge the consistency of subject responses, identifying if a few slow reactions are skewing the data [8].
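To see why percentiles are more informative than the mean here, the sketch below computes P50, P90, and P95 with the nearest-rank method on a small set of response times containing one slow trial. The nearest-rank rule is one of several percentile conventions and is an assumption of this example, as are the function name `percentile` and the data.

```python
import math
from statistics import mean


def percentile(values: list[float], p: int) -> float:
    """Nearest-rank percentile: the smallest value with at least p% of data at or below it."""
    if not values or not 0 < p <= 100:
        raise ValueError("need data and 0 < p <= 100")
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical response times in seconds; one trial is much slower than the rest.
times = [0.8, 0.9, 1.0, 1.0, 1.1, 1.1, 1.2, 1.2, 1.3, 6.5]
print("mean:", round(mean(times), 2))
for p in (50, 90, 95):
    print(f"P{p}:", percentile(times, p))
```

In this example the single slow trial pulls the mean above every typical response, while P50 and P90 stay close to the bulk of the data and P95 exposes the outlier.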

Q5: How can I determine if my behavioral assay is reliably measuring cognitive function and not other factors? A reliable assay minimizes the influence of confounding variables. Key strategies include:

- Control for Confounds: Actively monitor and statistically account for factors like motivation, previous experience, body size, sex, age, and personality, as these can influence task performance [9].

- Use Randomized, Controlled Trials (RCT): Simulations show that randomized designs have much lower false positive and false negative error rates compared to non-randomized studies, leading to more robust inferences about the intervention's effect [10].

- Validate the Assay: Confounds are likely to be specific to the study system and experimental design, so an assay should be validated and refined for each study system rather than assumed to transfer unchanged [9].

Troubleshooting Common Performance Issues

| Problem | Potential Causes | Investigation & Resolution Steps |

|---|---|---|

| High Error Rate | Task design is too complex; subject is unmotivated (e.g., not food-deprived enough); presence of uncontrolled external stimuli (e.g., noise); inadequate training or habituation. | Simplify the task or break it into simpler steps; calibrate motivation (e.g., adjust food restriction protocols); control the environment to minimize distractions; ensure adequate training until performance plateaus. |

| Increased Response Time / Latency | Cognitive load is too high; fatigue or satiation; underlying health issues in the subject; equipment or software latency. | Review task demands and reduce complexity if needed; shorten session length or ensure testing occurs during the subject's active period; perform health checks; benchmark equipment to isolate technical from biological latency. |

| Low or Inconsistent Throughput | Task is not intuitive for the species; inter-trial interval is too long; low subject engagement or motivation; unstable or unreliable automated reward delivery. | Pilot different task designs to find a species-appropriate one; optimize the inter-trial interval to maintain engagement; use high-value rewards to boost motivation; regularly calibrate and maintain automated systems like feeders. |

| Poor Assay Stability & Repeatability | High inter-individual variability not accounted for; "batch effects" from different experimenters or time of day; the assay is measuring multiple constructs (e.g., both inhibition and persistence). | Increase sample size and use blocking in experimental design; standardize protocols and blind experimenters to hypotheses; conduct validation experiments to confirm the assay is measuring the intended cognitive trait and not other factors [9]. |

Essential Research Reagent Solutions

| Item | Function in Behavioral Research |

|---|---|

| Automated Operant Chamber | A standardized environment for presenting stimuli and delivering rewards, enabling precise measurement of response time, throughput, and error rate. |

| Video Tracking Software | Allows for automated, high-throughput quantification of subject movement, location, and specific behaviors, reducing observer bias. |

| Data Acquisition System | The hardware and software backbone that collects timestamped data from sensors, levers, and touchscreens for calculating all key metrics. |

| Motivational Reagents (e.g., rewards) | Food pellets, sucrose solution, or other positive reinforcers critical for maintaining subject engagement and performance stability across trials. |

| Environmental Enrichment | Items like nesting material and shelters help maintain subjects' psychological well-being, which is foundational for stable and reliable behavioral data. |

| Statistical Analysis Package | Software (e.g., R, SPSS, Python) essential for performing power analysis, calculating percentiles, error rates, and determining the significance of results [11]. |

Experimental Workflow for a Robust Behavioral Study

The diagram below outlines a generalized protocol for designing, executing, and analyzing a behavioral study to ensure reliable measurement of key performance metrics.

Relationship Between Performance Metrics and Study Outcomes

This diagram illustrates how the four core performance metrics interrelate to determine the overall success, reliability, and interpretability of a behavioral study.

Regulatory Landscape and Compliance Requirements for Test Reporting

For researchers, scientists, and drug development professionals, navigating the regulatory landscape for test reporting is a critical component of research integrity and compliance. The year 2025 has brought significant regulatory shifts across multiple domains, from financial services to laboratory diagnostics, with a common emphasis on enhanced transparency, data quality, and rigorous documentation [12]. This section addresses the compliance requirements and reporting standards relevant to control performance test reporting in research involving test species, and provides troubleshooting guidance and experimental protocols that support regulatory adherence while maintaining scientific validity.

Current Regulatory Framework

Key Regulatory Changes in 2025

The regulatory environment in 2025 is characterized by substantial updates across multiple jurisdictions and domains. Understanding these changes is fundamental to compliant test reporting practices.

Table: Major Regulatory Changes Effective in 2025

| Regulatory Area | Governing Body | Key Changes | Compliance Deadlines |

|---|---|---|---|

| Laboratory Developed Tests (LDTs) | U.S. Food and Drug Administration (FDA) | Phased implementation of LDT oversight as medical devices [13]. | Phase 1: May 6, 2025 (MDR systems); Full through 2028 [13]. |

| Point-of-Care Testing (POCT) | Centers for Medicare & Medicaid Services (CMS), under the Clinical Laboratory Improvement Amendments (CLIA) | Updated proficiency testing (PT) standards, revised personnel qualifications [14]. | Effective January 2025 [14]. |

| Securities Lending Transparency | U.S. Securities and Exchange Commission (SEC) & FINRA | SEC 10c-1a rule; reduced reporting fields, removed lifecycle event reporting [15]. | Implementation date: January 2, 2026 [15]. |

| Canadian Derivatives Reporting | Canadian Securities Administrators (CSA) | Alignment with CFTC requirements; introduction of UPI and verification requirements [15]. | Go-live: July 2025 [15]. |

Overarching Regulatory Trends

Several cross-cutting trends define the 2025 regulatory shift, as identified by KPMG's analysis [12]. These include:

- Regulatory Divergence: Increasingly inconsistent regulations across jurisdictions, requiring adaptable compliance strategies.

- Focus on Technology and Data Risks: Heightened scrutiny on AI applications, cybersecurity, and data protection.

- Enhanced Third-Party Risk Management: Growing requirements for oversight of vendors and service providers.

- Strengthened Governance and Controls: Continued high expectations for risk management frameworks and internal controls.

Essential Reporting Guidelines for Research

Adherence to established reporting guidelines is fundamental to producing reliable, reproducible research, particularly when involving test species.

ARRIVE 2.0 Guidelines for Animal Research

The ARRIVE (Animal Research: Reporting of In Vivo Experiments) guidelines 2.0 represent the current standard for reporting animal research [16] [17]. Developed by the NC3Rs (National Centre for the Replacement, Refinement & Reduction of Animals in Research), these evidence-based guidelines provide a checklist to ensure that publications contain enough information for the work to be transparent, reproducible, and a usable addition to the knowledge base.

The guidelines are organized into two tiers:

- The ARRIVE Essential 10: The minimum reporting requirement that allows readers to assess the reliability of findings.

- The Recommended Set: Additional items that provide further context to the study.

Table: The ARRIVE Essential 10 Checklist [16]

| Item Number | Item Description | Key Reporting Requirements |

|---|---|---|

| 1 | Study Design | Groups compared, control group rationale, experimental unit definition. |

| 2 | Sample Size | Number of experimental units per group, how sample size was determined. |

| 3 | Inclusion & Exclusion Criteria | Criteria for including/excluding data/animals, pre-established if applicable. |

| 4 | Randomisation | Method of sequence generation, allocation concealment, implementation. |

| 5 | Blinding | Who was blinded, interventions assessed, how blinding was achieved. |

| 6 | Outcome Measures | Pre-specified primary/secondary outcomes, how they were measured. |

| 7 | Statistical Methods | Details of statistical methods, unit of analysis, model adjustments. |

| 8 | Experimental Animals | Species, strain, sex, age or developmental stage, weight, genetic background, and source. |

| 9 | Experimental Procedures | Precise details of procedures, anesthesia, analgesia, euthanasia. |

| 10 | Results | For each analysis, precise estimates with confidence intervals. |

Additional Relevant Reporting Guidelines

Beyond ARRIVE, researchers should be aware of other pertinent reporting guidelines:

- CONSORT 2025 Statement: Updated guideline for reporting randomised trials, published simultaneously in multiple major journals in 2025 [18].

- CONSORT Extensions: Various specialized extensions exist for different trial types (e.g., non-inferiority, cluster, pragmatic) [18].

Troubleshooting Guides and FAQs

FAQ: Regulatory Compliance and Reporting Standards

Q1: What is the most critical change for laboratories developing their own tests in 2025? The FDA's final rule on Laboratory Developed Tests (LDTs) represents the most significant change, phasing in comprehensive oversight through 2028. The first deadline (May 6, 2025) requires implementation of Medical Device Reporting (MDR) systems and complaint file management. Laboratories must immediately begin assessing their current LDTs against the new requirements, focusing on validation protocols and quality management systems [13].

Q2: Our research involves animal models. What is the single most important reporting element we often overlook? Based on the ARRIVE guidelines, researchers most frequently underreport elements of randomization and blinding. Transparent reporting requires specifying the method used to generate the random allocation sequence, how it was concealed until interventions were assigned, and who was blinded during the experiment and outcome assessment. This information is crucial for reviewers to assess potential bias [16].

Q3: How have personnel qualification requirements changed for point-of-care testing in 2025? CLIA updates mean that nursing degrees no longer automatically qualify as equivalent to biological science degrees for high-complexity testing. However, new equivalency pathways allow nursing graduates to qualify through specific coursework and credit requirements. Personnel who met qualifications before December 28, 2024, are "grandfathered" in their roles [14].

Q4: What are the common deficiencies in anti-money laundering (AML) compliance that might parallel issues in research data management? FINRA has identified that firms often fail to properly classify relationships, leading to inadequate verification and insufficient identification of suspicious activity. Similarly, in research, failing to properly document all data relationships and transformations can compromise data integrity. The solution is implementing clear, documented procedures for data handling and verification throughout the research lifecycle [19].

Q5: How should we approach the use of Artificial Intelligence (AI) in our research and reporting processes? Regulatory bodies are emphasizing that existing rules apply regardless of technology. For AI tools, especially third-party generative AI, you must:

- Conduct pre-implementation assessment for compliance with reporting standards.

- Implement supervision strategies and risk mitigation for accuracy and bias.

- Address cybersecurity concerns, including potential data leaks.

- Maintain human oversight and validation of AI-generated content or analyses [19].

Troubleshooting Common Experimental Protocol Issues

Issue: Inconsistent results across repeated experiments with animal models.

- Potential Cause 1: Inadequate reporting of housing conditions and environmental variables.

- Solution: Implement standardized environmental monitoring and reporting protocols. Document and report temperature, humidity, light cycles, and housing density for all experimental subjects, as covered by the housing and husbandry item in the ARRIVE Recommended Set [16].

- Potential Cause 2: Uncontrolled experimenter effects or expectation bias.

- Solution: Enhance blinding protocols where feasible. Document who was blinded and how in experimental records (ARRIVE Item 5) [16].

Issue: Difficulty reproducing statistical analyses during peer review.

- Potential Cause: Insufficient detail in statistical methods reporting.

- Solution: Adopt the ARRIVE Item 7 requirements, specifying exact statistical tests used, software/version, unit of analysis, and any data transformations. Provide precise estimates with confidence intervals rather than just p-values [16].
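As an illustration of reporting an estimate with a confidence interval instead of a bare p-value, the sketch below computes a difference in group means with a percentile bootstrap interval. The percentile bootstrap is a technique chosen for this example because it needs only the standard library; ARRIVE does not prescribe it. The function name, seed, resample count, and data are all assumptions.

```python
import random
from statistics import mean


def bootstrap_mean_diff(treated, control, n_boot=2000, alpha=0.05, seed=0):
    """Difference in means with a percentile bootstrap (1 - alpha) interval."""
    rng = random.Random(seed)  # fixed seed so the analysis can be reproduced
    diffs = []
    for _ in range(n_boot):
        # Resample each group with replacement, keeping group sizes fixed.
        t = [rng.choice(treated) for _ in treated]
        c = [rng.choice(control) for _ in control]
        diffs.append(mean(t) - mean(c))
    diffs.sort()
    lower = diffs[int((alpha / 2) * n_boot)]
    upper = diffs[int((1 - alpha / 2) * n_boot) - 1]
    return mean(treated) - mean(control), lower, upper


# Hypothetical outcome values for two groups of six animals.
treated = [12.1, 13.4, 11.8, 14.0, 12.9, 13.3]
control = [10.2, 11.1, 9.8, 10.9, 10.5, 11.4]
estimate, lower, upper = bootstrap_mean_diff(treated, control)
print(f"difference = {estimate:.2f}, 95% CI [{lower:.2f}, {upper:.2f}]")
```

Whatever method is used, the methods section should name it along with the software version, the unit of analysis, and the random seed where resampling is involved.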

Experimental Protocols and Workflows

Protocol for Implementing ARRIVE 2.0 Guidelines

This protocol ensures compliant reporting for studies involving test species.

Phase 1: Pre-Experimental Planning

- Study Design Documentation: Define all experimental groups, including control groups, with clear rationale. Determine the experimental unit (e.g., individual animal, litter) [16].

- Sample Size Justification: Perform an a priori sample size calculation using appropriate statistical methods, citing the method and software used.

- Pre-registration (Recommended): Consider pre-registering the study design, primary outcomes, and analysis plan to enhance rigor.
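An a priori sample size calculation for comparing two group means can be sketched with the normal approximation, where the per-group size is 2 × ((z for 1 − α/2 + z for the target power) / d)², with d the standardized effect size. This is a minimal sketch under that approximation; a t-based calculation in dedicated software typically gives a slightly larger number, and the function name `n_per_group` and the defaults are assumptions.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size for a two-sided, two-sample comparison of means."""
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for two-sided alpha
    z_beta = z.inv_cdf(power)             # quantile matching the target power
    return ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


for d in (0.5, 0.8, 1.0):
    print(f"d = {d}: {n_per_group(d)} experimental units per group")
```

The effect size entered here should be justified from pilot data or prior literature, and that justification belongs in the report alongside the method and software used.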

Phase 2: Experimental Execution

- Randomization Implementation: Use a validated random allocation method (e.g., computer-generated) with allocation concealment.

- Blinding Procedures: Implement blinding of caregivers and outcome assessors where possible. Document blinding methods.

- Outcome Measurement Standardization: Train all personnel on standardized measurement techniques for all outcome measures.
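A computer-generated allocation sequence can be produced with permuted blocks, which keeps group sizes balanced throughout enrolment. The sketch below is one possible approach, not a method required by ARRIVE; the function name `block_randomise`, the group labels, the animal identifiers, and the seed are hypothetical.

```python
import random


def block_randomise(animal_ids, groups=("control", "treatment"), seed=2025):
    """Allocate animals to groups in permuted blocks of size len(groups)."""
    rng = random.Random(seed)  # record the seed so the sequence can be regenerated
    allocation = {}
    ids = list(animal_ids)
    for start in range(0, len(ids), len(groups)):
        block = list(groups)
        rng.shuffle(block)  # random group order within each block
        for animal, group in zip(ids[start:start + len(groups)], block):
            allocation[animal] = group
    return allocation


animals = [f"A{i:02d}" for i in range(1, 13)]
for animal, group in block_randomise(animals).items():
    print(animal, group)
```

For allocation concealment, the sequence would normally be generated and held by someone who is not involved in enrolling or handling the animals.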

Phase 3: Data Analysis and Reporting

- Statistical Analysis: Follow the pre-specified analysis plan. Account for any missing data or protocol deviations.

- Comprehensive Reporting: Use the ARRIVE Essential 10 checklist as a manuscript preparation guide, ensuring all items are addressed.

ARRIVE 2.0 Implementation Workflow

Protocol for Addressing Regulatory Compliance for LDTs

This protocol addresses the new FDA requirements for Laboratory Developed Tests.

Phase 1: Assessment and Gap Analysis (Months 1-2)

- Inventory LDTs: Catalog all LDTs currently offered, categorizing by risk level.

- Gap Analysis: Compare current practices against FDA Phase 1 requirements (MDR systems, complaint files).

Phase 2: System Implementation (Months 3-4)

- Medical Device Reporting: Establish systems for reporting adverse events as required by 21 CFR Part 803.

- Complaint Management: Implement procedures for receiving, reviewing, and investigating complaints.

Phase 3: Preparation for Subsequent Phases (Months 5-6)

- Quality Systems Development: Begin developing comprehensive quality systems for Phase 3 (2027).

- Premarket Review Planning: Identify higher-risk LDTs that will require premarket review in Phases 4-5.

LDT Compliance Implementation Timeline

The Scientist's Toolkit: Essential Research Reagents and Materials

Table: Essential Research Reagents and Materials for Compliant Test Reporting

| Item/Reagent | Function/Application | Reporting Considerations |

|---|---|---|

| Standardized Control Materials | Quality control for experimental procedures and test systems. | Document source, lot number, preparation method, and storage conditions (ARRIVE Item 9) [16]. |

| Validated Assay Kits | Consistent measurement of outcome variables. | Report complete product information, validation data, and any modifications to manufacturer protocols. |

| Data Management System | Secure capture, storage, and retrieval of experimental data. | Must maintain audit trails and data integrity in compliance with ALCOA+ principles. |

| Statistical Analysis Software | Implementation of pre-specified statistical analyses. | Specify software, version, and specific procedures/packages used (ARRIVE Item 7) [16]. |

| Sample Tracking System | Management of sample chain of custody and storage conditions. | Critical for documenting inclusion/exclusion criteria and handling of experimental units. |

| Environmental Monitoring Equipment | Tracking of housing conditions for animal subjects. | Essential for reporting housing and husbandry conditions (ARRIVE Recommended Set) [16]. |

| Electronic Laboratory Notebook (ELN) | Documentation of experimental procedures and results. | Supports reproducible research and regulatory compliance through timestamped, secure record-keeping. |


FAQs on Data Splitting Fundamentals

1. What is the purpose of splitting data into training, validation, and test sets? Splitting data is fundamental to building reliable machine learning models. Each subset serves a distinct purpose [20]:

- Training Set: This is the data used to fit the model's parameters (e.g., weights in a neural network). The model sees and learns from this data [21].

- Validation Set: This set provides an unbiased evaluation of a model fit on the training data while tuning the model's hyperparameters (e.g., learning rate, number of layers). It acts as a checkpoint during development to prevent overfitting [22] [20].

- Test Set (or Blind Test Set): This set is used to provide a final, unbiased evaluation of a model that has been fully trained and tuned. It is the gold standard for assessing how the model will perform on new, unseen data in the real world [22] [21].

2. Why is a separate "blind" test set considered critical? A separate test set that is completely isolated from the training and validation process is crucial for obtaining a true estimate of a model's generalization ability [20]. If you report the validation score as the final result, the estimate is over-optimistic, because the hyperparameters were chosen to perform well on that very set; the validation data has effectively leaked into model selection. The blind test set ensures the model is evaluated on genuinely novel data, which is the ultimate test of its utility in real-world applications, such as predicting drug efficacy or toxicity [23] [24].

3. How do I choose the right split ratio for my dataset? The optimal split ratio depends on the size and nature of your dataset. There is no single best rule, but common practices and considerations are summarized in the table below [25] [20] [26]:

| Dataset Size | Recommended Split (Train/Val/Test) | Key Considerations & Methods |

|---|---|---|

| Very Large Datasets (e.g., millions of samples) | 98/1/1 or similar | With ample data, even a small percentage provides sufficient samples for reliable validation and testing. |

| Medium to Large Datasets | 70/15/15 or 80/10/10 | A balanced approach that provides enough data for both learning and evaluation. |

| Small Datasets | 60/20/20 | A larger portion is allocated for evaluation due to the limited data pool. |

| Very Small Datasets | Avoid simple splits; use Cross-Validation | Techniques like k-fold cross-validation use the entire dataset for both training and validation, providing a more robust evaluation. |

4. What is data leakage, and how can I avoid it in my experiments? Data leakage occurs when information from outside the training dataset, particularly from the test set, is used to create the model. This leads to overly optimistic performance that won't generalize. To avoid it [26]:

- Completely Isolate the Test Set: The test set should not be used for any aspect of model training, including hyperparameter tuning or feature selection [20].

- Preprocess with Care: Any scaling or normalization should be fit on the training data and then applied to the validation and test sets. Fitting a scaler on the entire dataset before splitting is a common source of leakage.

- Be Wary of Temporal Leakage: For time-series or sequential data (common in patient records), ensure the test set contains data from a future time period compared to the training data. Using a future data point to predict a past event invalidates the evaluation [27] [28].
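The preprocessing point above can be illustrated with a minimal sketch, assuming scikit-learn's `StandardScaler` and synthetic stand-in data (the array shapes, distribution, and seed are illustrative assumptions). The scaler's statistics are estimated from the training rows only and then reused on the test rows:

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for assay readouts (illustrative values only).
rng = np.random.default_rng(1)
X = rng.normal(loc=50.0, scale=10.0, size=(500, 3))
y = rng.integers(0, 2, size=500)

# Split first, so the test rows never influence the preprocessing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=1
)

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # mean/std estimated from training rows only
X_test_scaled = scaler.transform(X_test)        # training statistics reused, never refit

print("train mean after scaling:", X_train_scaled.mean(axis=0).round(6))
print("test mean after scaling: ", X_test_scaled.mean(axis=0).round(6))
```

The test-set means will generally not be exactly zero, which is expected: the test data is being described in terms of what the model saw during training.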

Troubleshooting Guide: Common Data Splitting Issues

| Problem | Likely Cause | Solution | Relevant to Drug Development Context |

|---|---|---|---|

| High Training Accuracy, Low Test Accuracy | Overfitting: The model has memorized the training data, including its noise and outliers, rather than learning to generalize. | • Simplify the model. • Apply regularization techniques (L1, L2). • Increase the size of the training data. • Use early stopping with the validation set [22] [20]. | A model overfit to in vitro assay data may fail to predict in vivo outcomes. |

| Large Discrepancy Between Validation and Test Performance | Information Leakage or the validation set was used for too many tuning rounds, effectively overfitting to it. | • Ensure the test set is completely blinded and untouched until the final evaluation. • Use a separate validation set for tuning, not the test set [20] [26]. | Crucial when transitioning from a validation cohort (e.g., cell lines) to a final blind test (e.g., patient-derived organoids) [24]. |

| Unstable Model Performance Across Different Splits | The dataset may be too small, or a single random split may not be representative of the underlying data distribution. | • Use k-fold cross-validation for a more robust estimate of model performance. • For imbalanced datasets (e.g., rare adverse events), use stratified splitting to maintain class ratios in each subset [26] [21]. | Essential for rare disease research or predicting low-frequency toxicological events to ensure all subsets contain representative examples. |

| Model Fails on New Real-World Data Despite Good Test Performance | Inadequate Data Splitting Strategy: A random split may have caused the test set to be too similar to the training data, failing to assess true generalization. | • For temporal data, use a global temporal split where the test set is from a later time period than the training set [27] [28]. • Ensure the test data spans the full range of scenarios the model will encounter. | A model trained on historical compound data may fail on newly discovered chemical entities if the test set doesn't reflect this "future" reality. |
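The global temporal split mentioned in the last row can be sketched as follows. This is a minimal illustration using NumPy only; the `day_recorded` column, the 80/20 cutoff, and the synthetic data are assumptions made for the example:

```python
import numpy as np

# Hypothetical records, each stamped with the study day on which it was collected.
rng = np.random.default_rng(7)
n_samples = 200
day_recorded = rng.integers(0, 365, size=n_samples)
X = rng.normal(size=(n_samples, 4))

# Order records chronologically, then cut once: earliest 80% train, latest 20% test.
order = np.argsort(day_recorded, kind="stable")
cutoff = int(0.8 * n_samples)
train_idx, test_idx = order[:cutoff], order[cutoff:]

latest_train_day = day_recorded[train_idx].max()
earliest_test_day = day_recorded[test_idx].min()
print(f"train ends on day {latest_train_day}, test starts on day {earliest_test_day}")
```

Records that share the cutoff day can land on both sides of an index-based cut. Where that matters, choosing an explicit cutoff date rather than a row count avoids the overlap.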

Experimental Protocol: Implementing a Robust Train-Validation-Test Split

This protocol outlines the steps for a robust data splitting strategy, critical for generating reliable and reproducible models in research.

1. Objective To partition a dataset into training, validation, and blind test subsets that allow for effective model training, unbiased hyperparameter tuning, and a final evaluation that accurately reflects real-world performance.

2. Materials and Reagents (The Scientist's Toolkit)

| Item / Concept | Function in the Experiment |

|---|---|

| Full Dataset | The complete, pre-processed collection of data points (e.g., molecular structures, toxicity readings, patient response metrics). |

| sklearn.model_selection.train_test_split | A widely used Python function for randomly splitting datasets into subsets [25] [26]. |

| Random State / Seed | An integer value used to initialize the random number generator, ensuring that the data split is reproducible by anyone who runs the code [25]. |

| Stratification | A technique that ensures the relative class frequencies (e.g., "toxic" vs. "non-toxic") are preserved in each split, which is vital for imbalanced datasets [26]. |

| Computational Environment (e.g., Python, Jupyter Notebook) | The software platform for executing the data splitting and subsequent machine learning tasks. |

3. Methodology

Step 1: Data Preprocessing and Initial Shuffling

- Clean the entire dataset (handle missing values, remove outliers, etc.).

- Shuffle the data randomly to avoid any order-related biases [26].

Step 2: Initial Split - Separate the Test Set

- The first split is performed to isolate the blind test set. This data will be locked away and not used again until the very end.

- A typical initial split is 80% for training/validation and 20% for testing, but this should be adjusted based on the dataset size considerations in the FAQ above.

- Code Example:
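A minimal sketch of this first split, assuming scikit-learn's `train_test_split` and synthetic stand-in data (the array shapes, the roughly 10% positive class, and the seed of 42 are illustrative assumptions, not values from a real study):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: 1000 samples, 5 features, imbalanced binary label.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 5))
y = (rng.random(1000) < 0.1).astype(int)

# Hold out 20% as the blind test set. A fixed random_state makes the split
# reproducible, and stratify=y keeps the class ratio similar in both parts.
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=0.20, random_state=42, stratify=y
)

print("train+validation:", X_temp.shape, "blind test:", X_test.shape)
```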

Step 3: Secondary Split - Separate the Validation Set

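- The remaining data (X_temp, y_temp) is now split again to create the training set and the validation set.

- This split is performed on the X_temp set, so the validation fraction must be rescaled. For example, to get a validation set equal to 15% of the original data, you would use 0.15 / 0.80 = 0.1875 of the X_temp set, which gives final proportions of Training (65%), Validation (15%), Test (20%). Splitting off 20% of X_temp instead gives final proportions of Training (64%), Validation (16%), Test (20%).

- Code Example: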
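A minimal sketch of the two-stage split, again assuming scikit-learn's `train_test_split` and synthetic data (shapes and seeds are illustrative assumptions). The validation fraction is rescaled because it is taken from the 80% that remains after the test set has been removed:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: 1000 samples, 5 features, binary label.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 5))
y = rng.integers(0, 2, size=1000)

# Stage 1: isolate the blind test set (20% of all data).
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=0.20, random_state=42, stratify=y
)

# Stage 2: carve the validation set out of the remainder.
# 15% of the original data corresponds to 0.15 / 0.80 = 0.1875 of X_temp.
val_fraction_of_temp = 0.15 / 0.80
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=val_fraction_of_temp, random_state=42, stratify=y_temp
)

print(len(X_train), len(X_val), len(X_test))
```

With 1000 samples this yields a 65/15/20 split. Passing `test_size=0.20` in the second stage would instead produce 64/16/20.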

Step 4: Workflow Execution and Final Evaluation

- The model is trained on X_train and y_train.

- Hyperparameters are tuned by evaluating performance on X_val and y_val.

- Once the model is finalized, it is evaluated exactly once on the blind test set (X_test, y_test) to report the final, unbiased performance metrics.

Data Splitting Workflow

The following diagram illustrates the sequential workflow for splitting your dataset and how each subset is used in the model development lifecycle.

Establishing Performance Benchmarks and Acceptance Criteria

In the context of control performance test species reporting research, establishing rigorous performance benchmarks and acceptance criteria is fundamental to ensuring the validity, reliability, and reproducibility of experimental data. For researchers, scientists, and drug development professionals, these criteria serve as the objective standards against which a system's or methodology's performance is measured. They define the required levels of speed, responsiveness, stability, and scalability for your experimental processes and data reporting systems. A performance benchmark is a set of metrics that represent the validated behavior of a system under normal conditions [29], while acceptance criteria are the specific, measurable conditions that must be met for the system's performance to be considered successful [30]. Clearly defining these elements is critical for catching avoidable performance degradations early and for ensuring that your research outputs meet the requisite service-level agreements and scientific standards [29].

Key Performance Metrics and Benchmarks

The first step in establishing a performance framework is to define the quantitative metrics that will be monitored. The table below summarizes the key performance indicators critical for assessing research and reporting systems.

Table 1: Key Performance Metrics for Research Systems

| Metric | Description | Common Benchmark Examples |

|---|---|---|

| Response Time [31] | Time between sending a request and receiving a response. | Critical operations (e.g., data analysis, complex queries) should complete within a defined threshold, such as 2-4 seconds [29] [30]. |

| Throughput [31] | Amount of data transferred or transactions processed in a given period (e.g., Requests Per Second). | System must process a defined number of data transactions or analysis jobs per second [31]. |

| Resource Utilization [31] | Percentage of CPU and Memory (RAM) consumed during processing. | CPU and memory usage must remain below a target level (e.g., 75%) under normal load to ensure system stability [31]. |

| Error Rate [31] | Percentage of requests that result in errors compared to the total number of requests. | The system error rate must not exceed 1% during sustained peak load conditions [30]. |

| Concurrent Users [31] | Number of users or systems interacting with the platform simultaneously. | The application must support a defined number of concurrent researchers accessing and uploading data without performance degradation [31]. |

These metrics should be gathered under test conditions that closely mirror your production research environment to ensure the data is measurable and actionable [29].
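As a rough illustration of how three of these metrics can be derived from raw test output, the sketch below summarizes a list of request records. The `RequestRecord` type, the `summarize` helper, and the sample numbers are hypothetical; real load testing tools export similar fields under their own names:

```python
from dataclasses import dataclass

@dataclass
class RequestRecord:
    latency_s: float  # time between sending the request and receiving the response
    ok: bool          # False if the request ended in an error

def summarize(records, window_s):
    """Compute throughput, error rate, and mean latency for one test window."""
    total = len(records)
    errors = sum(1 for r in records if not r.ok)
    return {
        "throughput_rps": total / window_s,
        "error_rate_pct": 100.0 * errors / total,
        "mean_latency_s": sum(r.latency_s for r in records) / total,
    }

# Illustrative data: 200 requests observed over a 10-second window, 2 of them failed.
records = [RequestRecord(0.5, True)] * 198 + [RequestRecord(3.0, False)] * 2
summary = summarize(records, window_s=10.0)
print(summary)
```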

Defining Acceptance Criteria for Performance

Acceptance criteria translate performance targets into specific, verifiable conditions for success. They are the definitive rules used to judge whether a system meets its performance requirements.

Core Components of Performance Acceptance Criteria

Effective performance acceptance criteria should include [30]:

- Performance Metrics: Quantifiable measures like response time and throughput.

- Scalability Requirements: Definitions of how the system should perform as the number of users, data volume, or transaction frequency increases.

- Load Conditions: Specification of the expected load, such as the number of concurrent users or transactions per second.

- Error Tolerance: Outline of acceptable error rates under various load conditions.

- Testing Scenarios: Details on how performance will be validated, including tools and environments.

Examples of Performance-Focused Acceptance Criteria

Table 2: Example Acceptance Criteria for Research Scenarios

| Research Scenario | Sample Acceptance Criteria |

|---|---|

| Data Analysis Query | The database query for generating a standard pharmacokinetic report must complete within 5 seconds for 95% of executions when the system is under a load of 50 concurrent users [30]. |

| Experimental Data Upload | The system must allow a researcher to upload a 1GB dataset within 3 minutes, with a throughput of no less than 5.6 MB/sec, while 20 other users are performing routine tasks. |

| Central Reporting Dashboard | The dashboard must load all visualizations and summary statistics within 4 seconds for 99% of page requests, with a server-side API response time under 2 seconds [30]. |

When defining these criteria, it is vital to focus on user requirements and expectations to ensure the delivered work meets researcher needs and scientific rigor [29].
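Percentile-based criteria such as "within 5 seconds for 95% of executions" can be checked mechanically. The sketch below is one way to do so, assuming a nearest-rank percentile definition (other definitions interpolate and can give slightly different values); the function names and sample timings are hypothetical:

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def meets_criterion(response_times_s, threshold_s=5.0, pct=95):
    """True if the pct-th percentile response time is within the threshold."""
    return percentile(response_times_s, pct) <= threshold_s

# Illustrative response times (seconds) from 20 executions of a report query.
samples = [1.2, 0.9, 2.4, 3.1, 1.8, 4.7, 2.2, 1.1, 0.8, 6.3,
           1.5, 2.0, 2.9, 1.3, 3.6, 1.0, 2.7, 1.9, 4.1, 2.5]

print("p95:", percentile(samples, 95))
print("passes 5 s criterion:", meets_criterion(samples))
```

A single slow outlier (6.3 s here) does not fail the criterion, because only the 95th percentile is constrained. This is why percentile targets are usually preferred over a hard maximum.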

Performance Testing Methodology and Protocols

To validate your benchmarks and acceptance criteria, a structured testing protocol is essential. Performance testing involves evaluating a system's response time, throughput, resource utilization, and stability under various scenarios [29].

Types of Performance Tests

Select the test type based on the specific performance metrics and acceptance criteria you need to verify [29].

Table 3: Protocols for Performance Testing

| Test Type | Protocol Description | Primary Use Case in Research |

|---|---|---|

| Load Testing [29] [31] | Simulate realistic user loads to measure performance under expected peak workloads. | Determines if the data reporting system can handle the maximum expected number of researchers submitting results simultaneously. |

| Stress Testing [29] [31] | Push the system beyond its normal limits to identify its breaking points and measure its ability to recover. | Determines the resilience of the laboratory information management system (LIMS) and identifies the maximum capacity of the data pipeline. |

| Soak Testing (Endurance) [29] [31] | Run the system under sustained high loads for an extended period (e.g., several hours or days). | Evaluates the stability and reliability of long-running computational models or data aggregation processes; helps identify memory leaks or resource degradation. |

| Spike Testing [29] [31] | Simulate sudden, extreme surges in user load over a short period. | Measures the system's ability to scale and maintain performance during peak periods, such as the deadline for a multi-center trial report submission. |

Experimental Workflow for Performance Validation

The following diagram illustrates the logical workflow for establishing benchmarks and executing a performance testing cycle.

Diagram 1: Performance Validation Workflow

Troubleshooting Common Performance Issues

Despite a well-defined testing protocol, performance issues can arise. This section provides guidance in a question-and-answer format to help researchers and IT staff diagnose common problems.

Q1: Our data analysis query is consistently missing its target response time. What are the first steps we should take?

A: Follow a structured investigation path:

- Check Resource Bottlenecks: Use monitoring tools to verify CPU, memory, and disk I/O utilization on the database server. Sustained high usage (e.g., >80%) indicates a resource bottleneck [31].

- Analyze the Query: Examine the query execution plan to identify inefficient operations, such as full table scans instead of indexed lookups.

- Review Test Environment: Ensure your test environment is an accurate mirror of production. Even minor differences in hardware, software versions, or dataset size can drastically alter performance [29].

- Check Concurrency: Verify if the slow response occurs under load or in isolation. If it only happens under load, investigate locking/blocking between concurrent transactions.

Q2: During stress testing, our application fails with a high number of errors. How do we isolate the root cause?

A: A high error rate under load often points to stability or resource issues.

- Categorize Errors: Use your APM and test tool's error report to classify the errors (e.g., HTTP 500, timeout, connection refused) [32]. Different errors point to different root causes.

- Analyze Application Logs: Inspect the application and web server logs from the time of the test for stack traces or warning messages that precede the errors [32].

- Profile the Code: Use profiling tools to identify areas of the code that consume the most resources (CPU, memory) during the test. This can reveal inefficient algorithms or memory leaks [29].

- Check External Dependencies: Determine if the errors are originating from your application or a downstream service (e.g., a database, external API). If a downstream service is failing, it will cause cascading failures in your system.

Q3: The system performance meets benchmarks initially but degrades significantly during a long-duration (soak) test. What does this indicate?

A: Performance degradation over time is a classic symptom uncovered by soak testing. Potential causes include [31]:

- Memory Leak: The application allocates memory but fails to release it, causing consumption to grow until the system runs out of memory. Profiling tools are essential for detecting this.

- Resource Exhaustion: A gradual filling of connection pools, thread pools, or disk space.

- Database Growth: An increase in the size of database tables or indexes during the test can slow down query performance.

- Inefficient Cache Configuration: A cache that is too small or has a poor eviction policy can lead to increasing load on the database over time.
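One simple way to screen soak-test output for a possible memory leak is to fit a straight line to memory samples over time and inspect the slope. The sketch below uses NumPy's `polyfit`; the hourly sampling, the synthetic series, and the 1 MB/hour threshold are illustrative assumptions, and a positive trend is only a prompt for profiling, not proof of a leak:

```python
import numpy as np

def memory_growth_mb_per_hour(hours, memory_mb):
    """Least-squares slope of memory usage against elapsed time."""
    slope, _intercept = np.polyfit(hours, memory_mb, deg=1)
    return slope

hours = np.arange(0, 24)

# Synthetic examples: one process gaining 12 MB per hour, one fluctuating around 500 MB.
leaky_mb = 500.0 + 12.0 * hours
stable_mb = 500.0 + 2.0 * (-1.0) ** hours

LEAK_THRESHOLD_MB_PER_HOUR = 1.0  # hypothetical cut-off for flagging a run

for name, series in [("leaky", leaky_mb), ("stable", stable_mb)]:
    slope = memory_growth_mb_per_hour(hours, series)
    flagged = slope > LEAK_THRESHOLD_MB_PER_HOUR
    print(f"{name}: {slope:.3f} MB/hour, leak suspected: {flagged}")
```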

The Scientist's Toolkit: Essential Research Reagents and Solutions

In performance test species reporting, the "reagents" are the tools and technologies that enable rigorous testing and monitoring.

Table 4: Key Research Reagent Solutions for Performance Testing

| Tool / Solution | Primary Function | Use Case in Performance Testing |

|---|---|---|

| Application Performance Monitoring (APM) [29] | Provides deep insights into applications, tracing transactions and mapping their paths through various services. | Used during and after testing to analyze and compare testing data against your performance baseline; essential for identifying code-level bottlenecks [29]. |

| Load Testing Tools (e.g., Apache JMeter) [30] | Simulates realistic user loads and transactions to generate system load. | Used to execute load, stress, and spike tests by simulating multiple concurrent users or systems interacting with the application [30]. |

| Profiling Tools [29] | Identifies performance bottlenecks within the application code itself. | Helps pinpoint areas of the code that consume the most CPU time or memory, guiding optimization efforts [29]. |

| Log Aggregation & Analysis (e.g., Elastic Stack) [32] | Collects, indexes, and allows for analysis of log data from all components of a system. | Crucial for troubleshooting errors and unusual behavior detected during performance tests by providing a centralized view of system events [32]. |


Strategic Methodologies and Practical Applications for Effective Testing

Performance testing is a critical type of non-functional testing that evaluates how a system performs under specific workloads that impact user experience [33]. For researchers, scientists, and drug development professionals, selecting the appropriate performance testing strategy is essential for validating experimental systems, computational models, and data processing pipelines. These testing methodologies ensure your research infrastructure can handle expected data loads, remain stable during long-running experiments, and gracefully manage sudden resource demands without compromising data integrity or analytical capabilities.

The strategic implementation of performance testing provides measurable benefits to research projects, including identifying performance bottlenecks before they affect critical experiments, ensuring system stability during extended data collection periods, and validating that computational resources can scale to meet analytical demands [34]. Within the context of control performance test species reporting research, these methodologies help maintain the reliability and reproducibility of experimental outcomes.

Performance Testing Types: Comparative Analysis

Quantitative Comparison of Testing Types

The table below summarizes the four primary performance testing strategies, their key metrics, and typical use cases in research environments.

Table 1: Performance Testing Types Comparison

| Testing Type | Primary Objective | Key Performance Metrics | Common Research Applications |

|---|---|---|---|

| Load Testing | Evaluate system behavior under expected concurrent user and transaction loads [33]. | Response time, throughput, resource utilization (CPU, memory) [34]. | Testing data submission portals, analytical tools under normal usage conditions. |

| Stress Testing | Determine system breaking points and recovery behavior by pushing beyond normal capacity [33] [34]. | Maximum user capacity, error rate, system recovery time [33]. | Assessing data processing systems during computational peak loads. |

| Endurance Testing | Detect performance issues like memory leaks during extended operation (typically 8+ hours) [33]. | Memory utilization, processing throughput over time, gradual performance degradation [33]. | Validating stability of long-term experiments and continuous data collection systems. |

| Spike Testing | Evaluate stability under sudden, extreme load increases or drops compared to normal usage [33]. | System recovery capability, error rate during spikes, performance degradation [33]. | Testing research portals during high-demand periods like grant deadlines. |

Performance Testing Workflow Diagram

The following diagram illustrates the logical relationship and decision pathway for selecting and implementing performance testing strategies within a research context.

Diagram Title: Performance Testing Strategy Selection Workflow

Troubleshooting Guides & FAQs

Common Performance Issues and Solutions

Table 2: Performance Testing Troubleshooting Guide

| Problem Symptom | Potential Root Cause | Diagnostic Steps | Resolution Strategies |

|---|---|---|---|

| Gradual performance degradation during endurance testing | Memory leaks, resource exhaustion, database connection pool issues [33]. | Monitor memory utilization over time, analyze garbage collection logs, check for unclosed resources [33]. | Implement memory profiling, optimize database connection management, increase resource allocation. |

| System crash under stress conditions | Inadequate resource allocation, insufficient error handling, hardware limitations [34]. | Identify the breaking point (users/transactions), review system logs for error patterns, monitor resource utilization peaks [33]. | Implement graceful degradation, optimize resource-intensive processes, scale infrastructure horizontally. |

| Slow response times during load testing | Inefficient database queries, insufficient processing power, network latency, suboptimal algorithms [34]. | Analyze database query performance, monitor CPU utilization, check network throughput, profile application code [34]. | Optimize database queries and indexes, increase computational resources, implement caching strategies. |

| Failure to recover after spike testing | Resource exhaustion, application errors, database lock contention [33]. | Check system recovery procedures, verify automatic restart mechanisms, analyze post-spike resource status [33]. | Implement automatic recovery protocols, optimize resource cleanup procedures, add circuit breaker patterns. |

Frequently Asked Questions

Q1: How do we distinguish between load testing and stress testing in research applications?

Load testing validates that your system can handle the expected normal workload, such as concurrent data submissions from multiple research stations. Stress testing pushes the system beyond its normal capacity to identify breaking points and understand how the system fails and recovers [33] [34]. For example, load testing would simulate typical database queries, while stress testing would determine what happens when query volume suddenly triples during intensive data analysis periods.

Q2: Which performance test is most critical for long-term experimental data collection?

Endurance testing (also called soak testing) is essential for long-term experiments as it uncovers issues like memory leaks or gradual performance degradation that only manifest during extended operation [33]. For research involving continuous data collection over days or weeks, endurance testing validates that systems remain stable and reliable throughout the entire experimental timeframe.

Q3: Our research portal crashes during high-demand periods. What testing approach should we prioritize?

Spike testing should be your immediate priority, as it specifically evaluates system stability under sudden and extreme load increases [33]. It simulates abrupt traffic surges, such as when multiple research teams simultaneously access results after an experiment concludes, and shows how the system behaves during such events and how it recovers afterward.

Q4: What are the key metrics we should monitor during performance testing of analytical platforms?

Essential metrics include response time (system responsiveness), throughput (transactions processed per second), error rate (failed requests), resource utilization (CPU, memory, disk I/O), and concurrent user capacity [34]. For analytical platforms, also monitor query execution times and data processing throughput to ensure research activities aren't impeded by performance limitations.

Q5: How can performance testing improve our drug development research pipeline?

Implementing comprehensive performance testing allows you to identify computational bottlenecks in data analysis workflows, ensure stability during high-throughput screening operations, and validate that systems can handle large-scale genomic or chemical data processing [35] [36]. This proactive approach reduces delays in research outcomes and supports more reliable data interpretation.

Experimental Protocols & Methodologies

Standardized Testing Protocol

The following protocol provides a structured methodology for implementing performance testing in research environments:

Test Environment Setup: Establish a controlled testing environment that closely mirrors production specifications, including hardware, software, and network configurations [34]. For computational research systems, this includes replicating database sizes, analytical software versions, and data processing workflows.

Performance Benchmark Definition: Define clear, measurable performance benchmarks based on research requirements. These should include:

- Maximum acceptable response times for critical operations

- Target throughput for data processing tasks

- Resource utilization thresholds (CPU, memory, storage I/O)

- Error rate acceptability limits [34]

Test Scenario Design: Develop realistic test scenarios that emulate actual research activities:

- Data submission and retrieval patterns

- Computational analysis workloads

- Simultaneous user access patterns

- Data export and reporting operations

Test Execution & Monitoring: Implement the testing plan while comprehensively monitoring:

- Application performance metrics (response times, throughput)

- System resource utilization

- Database performance indicators

- Network activity and latency [34]

Results Analysis & Optimization: Analyze results to identify performance bottlenecks, system limitations, and optimization opportunities. Implement improvements and retest to validate enhancements [34].

Table 3: Performance Testing Tools and Resources for Research Applications

| Tool Category | Specific Tools | Primary Research Application | Implementation Considerations |

|---|---|---|---|

| Load Testing Tools | Apache JMeter, Gatling, Locust [34] | Simulating multiple research users, data submission loads, API call volumes. | Open-source options available; consider protocol support and learning curve. |

| Monitoring Solutions | Dynatrace, New Relic, AppDynamics [34] | Real-time performance monitoring during experiments, resource utilization tracking. | Infrastructure requirements and cost may vary; evaluate based on research scale. |

| Cloud-Based Platforms | BrowserStack, BlazeMeter [34] | Distributed testing from multiple locations, testing without local infrastructure. | Beneficial for collaborative research projects with distributed teams. |

| Specialized Research Software | BT-Lab Suite [37] | Battery cycling experiments, specialized scientific equipment testing. | Domain-specific functionality for particular research instrumentation. |

Implementation Recommendations

For research organizations implementing performance testing, begin with load testing to establish baseline performance under expected conditions. Progress to stress testing to understand system limitations, then implement endurance testing to validate stability for long-term experiments. Finally, conduct spike testing to ensure the system can handle unexpected demand surges without catastrophic failure.

Integrate performance testing throughout the development lifecycle of research systems rather than as a final validation step [34]. This proactive approach identifies potential issues early, reducing costly revisions and ensuring research activities proceed without technical interruption. For drug development and species reporting research specifically, this methodology supports the reliability and reproducibility of experimental outcomes by ensuring the underlying computational infrastructure performs as required.

Troubleshooting Guides & FAQs

Frequently Asked Questions

Q1: My dataset is very small. Which method is most suitable to avoid overfitting? A: For small datasets, bootstrapping is often the most effective choice. It allows you to create multiple training sets the same size as your original data by sampling with replacement, making efficient use of limited data. Cross-validation, particularly Leave-One-Out Cross-Validation (LOOCV), is another option but can be computationally expensive and yield high variance in performance estimates for very small samples [38] [39].

Q2: I am getting different performance metrics every time I run my model validation. What could be the cause? A: High variance in performance metrics can stem from several sources:

- Small Dataset Size: All resampling methods suffer from high variance when data is scarce [40].

- Inherent Variance of the Method: Bootstrapping, due to its random sampling with replacement, can produce higher variance in performance estimates compared to k-fold cross-validation [38].

- Data Splitting Strategy: Using a simple random split instead of a stratified approach for classification tasks can create folds with varying class distributions, leading to inconsistent results [41].
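To illustrate the stratification point, the following sketch uses scikit-learn's `StratifiedKFold` on a synthetic, imbalanced label vector (10% positives; the data and seed are assumptions for the example). Each validation fold receives the same number of positive cases, which removes one source of fold-to-fold variation:

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Synthetic imbalanced labels: 20 positives (e.g., rare adverse events) out of 200.
y = np.array([1] * 20 + [0] * 180)
X = np.zeros((200, 1))  # feature values are irrelevant to how the folds are formed

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
positives_per_fold = [int(y[val_idx].sum()) for _, val_idx in skf.split(X, y)]

print("positives in each validation fold:", positives_per_fold)
```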

Q3: How do I choose the right value of k for k-fold cross-validation?

A: The choice of k involves a bias-variance trade-off. Common choices are 5 or 10.

- Lower k (e.g., 5): Results in a lower computational cost but may have higher bias (the performance estimate might be overly pessimistic).

- Higher k (e.g., 10): Provides a less biased estimate but has higher variance and is more computationally intensive. Leave-One-Out Cross-Validation (LOOCV, where k=n) is almost unbiased but has very high variance [38] [39].

Q4: My data has a grouped structure (e.g., multiple samples from the same patient). How should I split it? A: Standard random splitting can cause data leakage if samples from the same group are in both training and validation sets. You must use subject-wise (or group-wise) cross-validation [41]. This ensures all records from a single subject/group are entirely in either the training or the validation set, providing a more realistic estimate of model performance on new, unseen subjects.
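A minimal sketch of group-wise splitting, assuming scikit-learn's `GroupKFold` and a hypothetical dataset of 10 patients with 4 samples each (patient IDs and feature values are synthetic). The check inside the loop confirms that no patient appears on both sides of any fold:

```python
import numpy as np
from sklearn.model_selection import GroupKFold

# Hypothetical grouped data: 10 patients, 4 samples per patient.
rng = np.random.default_rng(3)
n_patients, samples_per_patient = 10, 4
groups = np.repeat(np.arange(n_patients), samples_per_patient)
X = rng.normal(size=(len(groups), 3))
y = rng.integers(0, 2, size=len(groups))

gkf = GroupKFold(n_splits=5)
folds = []
for train_idx, val_idx in gkf.split(X, y, groups=groups):
    train_patients = set(groups[train_idx].tolist())
    val_patients = set(groups[val_idx].tolist())
    # No patient may contribute samples to both training and validation.
    assert train_patients.isdisjoint(val_patients)
    folds.append((train_patients, val_patients))

print("validation patients per fold:", [sorted(v) for _, v in folds])
```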

Q5: What is the key practical difference between cross-validation and bootstrapping? A: The key difference lies in how they create the training and validation sets.

- Cross-validation partitions the data into mutually exclusive folds. Each data point is used for validation exactly once in a rotating manner [38].

- Bootstrapping creates new datasets by randomly sampling the original data with replacement. This means some data points may be repeated in the training set, while others (about 36.8%) are left out to form an out-of-bag (OOB) validation set [42] [38].
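The 36.8% figure follows from the probability that a given point is never drawn in n draws with replacement, (1 - 1/n)^n, which approaches 1/e ≈ 0.368 as n grows. A short simulation with NumPy (the sample size and seed are arbitrary choices for the illustration) shows the empirical out-of-bag fraction landing close to that value:

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000

# One bootstrap resample: n indices drawn with replacement from 0..n-1.
drawn = rng.integers(0, n, size=n)

# Points never drawn form the out-of-bag (OOB) set.
oob_mask = np.ones(n, dtype=bool)
oob_mask[drawn] = False
oob_fraction = oob_mask.mean()

expected = (1 - 1 / n) ** n  # tends to 1/e as n grows
print(f"simulated OOB fraction: {oob_fraction:.4f}, theoretical: {expected:.4f}")
```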

Troubleshooting Common Experimental Issues

Problem: Overly Optimistic Model Performance During Validation

- Potential Cause: Data leakage, often from performing preprocessing (e.g., normalization, imputation) before splitting the data.

- Solution: Always split your data first, then fit any preprocessing steps (like scalers) on the training set only before applying them to the validation set. Consider using nested cross-validation for a rigorous and unbiased evaluation, especially when also tuning hyperparameters [41].
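One way to combine both recommendations is to wrap preprocessing and model in a scikit-learn `Pipeline` and evaluate it with nested cross-validation. The sketch below is illustrative only: the synthetic dataset, the logistic regression model, the grid of `C` values, and the fold counts are all assumptions chosen to keep the example small:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic classification data standing in for a real assay dataset.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Because the scaler lives inside the pipeline, it is refit on the training
# portion of every fold and never sees that fold's validation rows.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

inner_cv = KFold(n_splits=3, shuffle=True, random_state=0)  # hyperparameter tuning
outer_cv = KFold(n_splits=5, shuffle=True, random_state=1)  # performance estimation

search = GridSearchCV(pipeline, param_grid={"clf__C": [0.1, 1.0, 10.0]}, cv=inner_cv)
outer_scores = cross_val_score(search, X, y, cv=outer_cv)

print("outer-fold accuracy:", outer_scores.round(3), "mean:", round(outer_scores.mean(), 3))
```

The outer scores estimate the performance of the whole procedure (tuning included), so the hyperparameters selected can differ from one outer fold to the next.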

Problem: Validation Performance is Much Worse Than Training Performance

- Potential Cause: Overfitting. The model has learned the training data too well, including its noise, and fails to generalize.

- Solution:

- Simplify the model (e.g., reduce model complexity, increase regularization).

- Gather more training data.

- Ensure your validation strategy, like bootstrapping, uses a proper out-of-bag (OOB) set or that cross-validation folds are truly independent [38].

Problem: Inability to Reproduce Validation Results

- Potential Cause: Lack of a fixed random seed for stochastic processes (e.g., random splitting, bootstrapping).

- Solution: Set a random seed at the beginning of your experiment to ensure that the data splits and model initialization are the same each time the code is run. This is crucial for reproducibility in scientific reporting [43].
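A small illustration of seeding, using Python's standard `random` module. The helper `shuffled_indices` and the seed value are arbitrary choices for this sketch; numerical and machine learning libraries keep their own generators, which would need to be seeded separately.

```python
import random


def shuffled_indices(n, seed):
    """Return a reproducible permutation of range(n) for a given seed."""
    rng = random.Random(seed)  # local generator; global state is untouched
    indices = list(range(n))
    rng.shuffle(indices)
    return indices


SEED = 20240101  # arbitrary value; record it alongside the reported results

first_run = shuffled_indices(10, SEED)
second_run = shuffled_indices(10, SEED)
print(first_run == second_run)  # the two permutations are identical
```

Reporting the seed together with software versions lets a reader regenerate the same splits.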

Comparative Data Analysis

The following table synthesizes key findings from a comparative study that used simulated datasets to evaluate how well different data splitting methods estimate true model generalization performance [40].

| Data Splitting Method | Key Characteristic | Performance on Small Datasets | Performance on Large Datasets | Note on Systematic Sampling (e.g., K-S, SPXY) |

|---|---|---|---|---|

| Cross-Validation | Data partitioned into k folds; each fold used once for validation. | Significant gap between validation and true test set performance. | Disparity decreases; models approximate central limit theory. | Designed to select the most representative samples for training, which can leave a poorly representative validation set. Leads to very poor estimation of model performance [40]. |

| Bootstrapping | Creates multiple datasets by sampling with replacement. | Significant gap between validation and true test set performance. | Disparity decreases; models approximate central limit theory. | |

| Common Finding | Sample size was the deciding factor for the quality of generalization performance estimates across all methods [40]. | An imbalance between training and validation set sizes negatively affects performance estimates [40]. | | |

Comparison of Core Methodologies

This table provides a direct comparison of the two primary data splitting methods, cross-validation and bootstrapping [38].

| Aspect | Cross-Validation | Bootstrapping |

|---|---|---|

| Definition | Splits data into k subsets (folds) for training and validation. | Samples data with replacement to create multiple bootstrap datasets. |

| Primary Purpose | Estimate how well model performance generalizes to unseen data. | Estimate the variability of a statistic or model performance. |

| Process | 1. Split data into k folds. 2. Train on k-1 folds, validate on the remaining fold. 3. Repeat k times. | 1. Randomly sample data with replacement (size = n). 2. Repeat to create B bootstrap samples. 3. Evaluate model on each sample (using OOB data). |

| Advantages | Helps detect overfitting by validating on held-out data; useful for model selection and tuning. | Captures uncertainty in estimates; useful for small datasets and assessing bias/variance. |

| Disadvantages | Computationally intensive for large k or datasets. | May overestimate performance due to sample similarity; computationally demanding. |

Experimental Protocols

Protocol 1: Implementing k-Fold Cross-Validation

This protocol is ideal for model evaluation and selection when you have a sufficient amount of data [41] [38].

- Define k: Choose the number of folds (common values are 5 or 10).

- Shuffle and Split: Randomly shuffle the dataset and partition it into k folds of approximately equal size. For classification, use stratified splitting to preserve the class distribution in each fold [41].

- Iterate and Validate: For each unique fold:

  - Designate the current fold as the validation set.

  - Designate the remaining k-1 folds as the training set.

  - Train your model on the training set.

  - Evaluate the model on the validation set and record the performance metric(s).

- Aggregate Results: Calculate the average and standard deviation of the performance metrics from the k iterations. The average is the estimate of your model's generalization performance.

Pseudo-Code:
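A minimal Python sketch of the protocol above, using only the standard library. The helper names (`k_fold_indices`, `cross_validate`, `fit_mean`, `mean_absolute_error`), the mean-predicting placeholder model, and the synthetic data are illustrative assumptions rather than anything taken from the cited sources. Stratification is not implemented here; libraries such as scikit-learn provide ready-made splitters for that.

```python
import random
import statistics


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and partition them into k near-equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def cross_validate(X, y, fit, score, k=5, seed=0):
    """Run k-fold cross-validation; return the mean and SD of the fold scores."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for val_idx in folds:
        held_out = set(val_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in val_idx], [y[i] for i in val_idx]))
    return statistics.fmean(scores), statistics.stdev(scores)


# Placeholder model for illustration: always predict the training mean.
def fit_mean(X_train, y_train):
    return statistics.fmean(y_train)


def mean_absolute_error(model, X_val, y_val):
    return statistics.fmean([abs(v - model) for v in y_val])


# Synthetic data, not taken from any study.
rng = random.Random(1)
X = [[rng.random()] for _ in range(50)]
y = [2.0 * row[0] + rng.gauss(0, 0.1) for row in X]

cv_mean, cv_sd = cross_validate(X, y, fit_mean, mean_absolute_error, k=5, seed=42)
print(f"MAE: {cv_mean:.3f} +/- {cv_sd:.3f}")
```

Passing `fit` and `score` as functions keeps the splitting logic independent of the model, so the same loop can be reused with a real estimator and metric.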

Protocol 2: Implementing the Bootstrap Method

This protocol is excellent for assessing the stability and variance of your model's performance, especially with small datasets [42] [44].

- Define B: Choose the number of bootstrap samples to create (often 1000 or more).

- Generate Bootstrap Samples: For each of the B iterations:

  - Create a bootstrap sample by randomly drawing n samples from the original dataset with replacement, where n is the size of the original dataset.

- Train and Evaluate: For each bootstrap sample:

  - Train your model on the bootstrap sample.

  - Evaluate the trained model on the out-of-bag (OOB) data, i.e., the samples not included in the bootstrap sample. This OOB evaluation provides a validation score.

- Aggregate Results: Calculate the average of the OOB performance metrics across all B iterations. The standard deviation of these metrics provides an estimate of the performance variability.

Pseudo-Code:
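A minimal Python sketch of the bootstrap protocol above, again limited to the standard library. The function `bootstrap_oob`, the mean-predicting placeholder model, and the synthetic data are hypothetical and serve only to make the steps concrete. The example uses B = 200 to keep the run short; the protocol suggests 1000 or more for reported results.

```python
import random
import statistics


def bootstrap_oob(X, y, fit, score, n_iterations=200, seed=0):
    """Return the mean and SD of out-of-bag scores over bootstrap resamples."""
    rng = random.Random(seed)
    n = len(X)
    scores = []
    for _ in range(n_iterations):
        boot_idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        in_bag = set(boot_idx)
        oob_idx = [i for i in range(n) if i not in in_bag]
        if not oob_idx:  # can happen for very small n; skip this resample
            continue
        model = fit([X[i] for i in boot_idx], [y[i] for i in boot_idx])
        scores.append(score(model, [X[i] for i in oob_idx], [y[i] for i in oob_idx]))
    return statistics.fmean(scores), statistics.stdev(scores)


# Placeholder model for illustration: always predict the training mean.
def fit_mean(X_train, y_train):
    return statistics.fmean(y_train)


def mean_absolute_error(model, X_val, y_val):
    return statistics.fmean([abs(v - model) for v in y_val])


# Synthetic data, not taken from any study.
data_rng = random.Random(1)
X = [[data_rng.random()] for _ in range(50)]
y = [2.0 * row[0] + data_rng.gauss(0, 0.1) for row in X]

boot_mean, boot_sd = bootstrap_oob(X, y, fit_mean, mean_absolute_error, seed=42)
print(f"OOB MAE: {boot_mean:.3f} +/- {boot_sd:.3f}")
```

The standard deviation returned here describes the spread of OOB scores across resamples, which is the variability estimate described in the last step of the protocol.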

Method Selection & Workflow Visualization

Decision Framework for Method Selection

The following diagram illustrates the logical process for selecting the most appropriate data splitting method based on your experimental goals and dataset characteristics.

Cross-Validation Workflow

This diagram details the step-by-step workflow for conducting a k-fold cross-validation experiment.

The Scientist's Toolkit: Research Reagent Solutions

This table details key computational tools and concepts essential for implementing robust data splitting methods in control performance test species reporting research.

| Item / Concept | Function & Application |

|---|---|

| Stratified Splitting | A modification to k-fold cross-validation that ensures each fold has the same proportion of class labels as the entire dataset. Critical for dealing with imbalanced datasets in classification problems [41] [38]. |

| Nested Cross-Validation | A rigorous method that uses an outer loop for performance estimation and an inner loop for hyperparameter tuning. It prevents optimistic bias and is the gold standard for obtaining a reliable performance estimate when tuning is needed [41]. |

| Out-of-Bag (OOB) Error | The validation error calculated from data points not included in a bootstrap sample. In bootstrapping, each model can be evaluated on its OOB samples, providing an efficient internal validation mechanism without a separate hold-out set [42] [38]. |

| Subject-Wise Splitting | A data splitting strategy where all data points from a single subject (or group) are kept together in either the training or validation set. Essential for avoiding data leakage in experiments with repeated measures or correlated data structures [41]. |

| Random Seed | A number used to initialize a pseudo-random number generator. Setting a fixed random seed is a crucial reproducibility practice that ensures the same data splits are generated every time the code is run, allowing for consistent and verifiable results [43]. |


This technical support center provides troubleshooting guides and FAQs for researchers, scientists, and drug development professionals establishing a QA program within the context of control performance test species reporting research.

Troubleshooting Guides and FAQs

Test Plan Development

Q: What are the essential components of a test plan for a study involving control test species? A: A robust test plan acts as the blueprint for your entire testing endeavor. For studies involving control test species, it must clearly define the scope, objectives, and strategy to ensure the validity and reliability of the data generated [45]. The key components include:

- Release Scope & Objectives: Define the specific features, functions, and test species included in the release. State the reason for testing, such as verifying that the control species responds to the test compound in a predictable and reproducible manner [45].

- Schedule & Milestones: Establish a realistic timeline with key phases for planning, test execution, and bug triage [45].

- Test Strategy & Logistics: Document the testing types (e.g., functional, performance), methods (manual vs. automated), and resource allocation (who, what, when) [45] [46].

- Test Environment & Data: Plan the hardware, software, and network configurations. Crucially, prepare the test data, which can be created manually, retrieved from a production environment, or generated via automated tools [45].

- Test Deliverables: List all outputs, such as the test plan document, test cases, test logs, defect reports, and a final test summary report [45].

- Suspension & Exit Criteria: Define conditions to pause testing and the predetermined results (e.g., 95% of critical test cases pass) required to deem a testing phase complete [45].

Q: A key assay in our study is yielding inconsistent results with our control species. What should we investigate? A: Inconsistent results can stem from multiple factors. Follow this troubleshooting guide:

- Review Test Data: Verify the integrity, storage conditions, and preparation methods of the compounds administered to the control species. Ensure test data was created or selected to satisfy execution preconditions [45].

- Audit the Test Environment: Check for deviations in the controlled environment (e.g., temperature, humidity, light cycles) that could affect the species' physiological response. The test environment must have stable, documented hardware and software configurations [45].

- Check Instrument Calibration: Confirm that all laboratory instruments and data collection systems are properly calibrated and maintained. This is part of ensuring "facilities and equipment" are suitable for their purpose, as checked in a GMP audit [47].

- Assess Personnel Training: Ensure all technicians performing the assays are consistently trained and follow the same documented procedures. A GMP audit would review personnel training records and competence [47].

- Analyze the Protocol: Scrutinize the experimental protocol for any ambiguities or uncontrolled variables that could introduce variation.

Audit Procedures

Q: Why is auditing a Contract Development and Manufacturing Organization (CDMO) critical when they are supplying materials for our control test species? A: The sponsor of a clinical trial is ultimately responsible for the safety of test subjects and must ensure that the investigational product, including its constituents, is manufactured according to Good Manufacturing Practice (GMP) [47]. An audit is the primary tool for this. Key reasons include:

- Risk Mitigation: It uncovers shortcomings in the CDMO's procedures, training, or equipment that could compromise the quality, safety, or efficacy of the materials supplied for your research [47].

- Regulatory Compliance: It fulfills the sponsor's oversight obligation, demonstrating to regulators that you have ensured your GMP suppliers meet requirements. Without an audit report, a Qualified Person (QP) may decline to release the product for use [47].

- Collaboration & Understanding: The on-site visit allows for comprehensive discussions about the project and provides a deeper understanding of the manufacturer’s processes, which benefits future collaboration [47].

Q: What are the main stages of a pharmaceutical audit for a vendor supplying our control substances? A: The pharmaceutical audit procedure is a structured, multi-stage process [48]:

- Audit Planning: Defining the scope, objectives, and schedule.

- Audit Preparation: Gathering relevant documentation and forming the audit team.

- Audit Execution (On-site or Remote): Conducting the audit through interviews, facility inspections, and document review. This assesses key areas like the quality system, personnel, facilities, production, and quality control [47].

- Audit Reporting: Compiling findings and suggestions for improvement in a formal audit report [47].

- Corrective and Preventive Actions (CAPA): The vendor addresses the findings with a CAPA plan. The auditor reviews and closes the audit once satisfied with the corrective actions [47].

- Audit Follow-up: Verifying the effectiveness of implemented CAPAs.

Q: Our internal audit revealed a documentation error in the handling of a control species. What steps must we take? A: This situation requires immediate and systematic action through a CAPA process:

- Containment: Immediately quarantine any affected samples or data and assess the immediate impact.

- Documentation: Log the event as a deviation in your quality management system.

- Root Cause Analysis: Investigate to find the fundamental reason for the documentation error (e.g., unclear procedure, training gap, process flaw).

- Corrective Action: Address the immediate issue, such as re-running the affected tests with proper documentation.

- Preventive Action: Implement changes to the system to prevent recurrence, such as revising SOPs, enhancing training, or introducing an automated documentation check.

- Verification of Effectiveness: Monitor the process to ensure the preventive action is working.

Data Quality Objectives